Force Fields and Interactions

Overview

Teaching: 30 min

Exercises: 0 minQuestions

What is Molecular Dynamics and how can I benefit from using it?

What is a force field?

What mathematical energy terms are used in biomolecular force fields?

Objectives

Understand strengths and weaknesses of MD simulations

Understand how the interactions between particles in a molecular dynamics simulation are modeled

Introduction

Atoms and molecules, the building blocks of matter, interact with each other. They are attracted at long distances, but at short distances the interactions become strongly repulsive. As a matter of fact, there is no need to look for a proof that such interactions exist. Every day, we observe indirect results of these interactions with our own eyes. For example, because of the attraction between water molecules, they stick together to form drops, which then rain down to fill rivers. On the other hand, due to the strong repulsion, one liter of the same water always weighs about 1 kg, regardless of how much pressure we use to compress it.

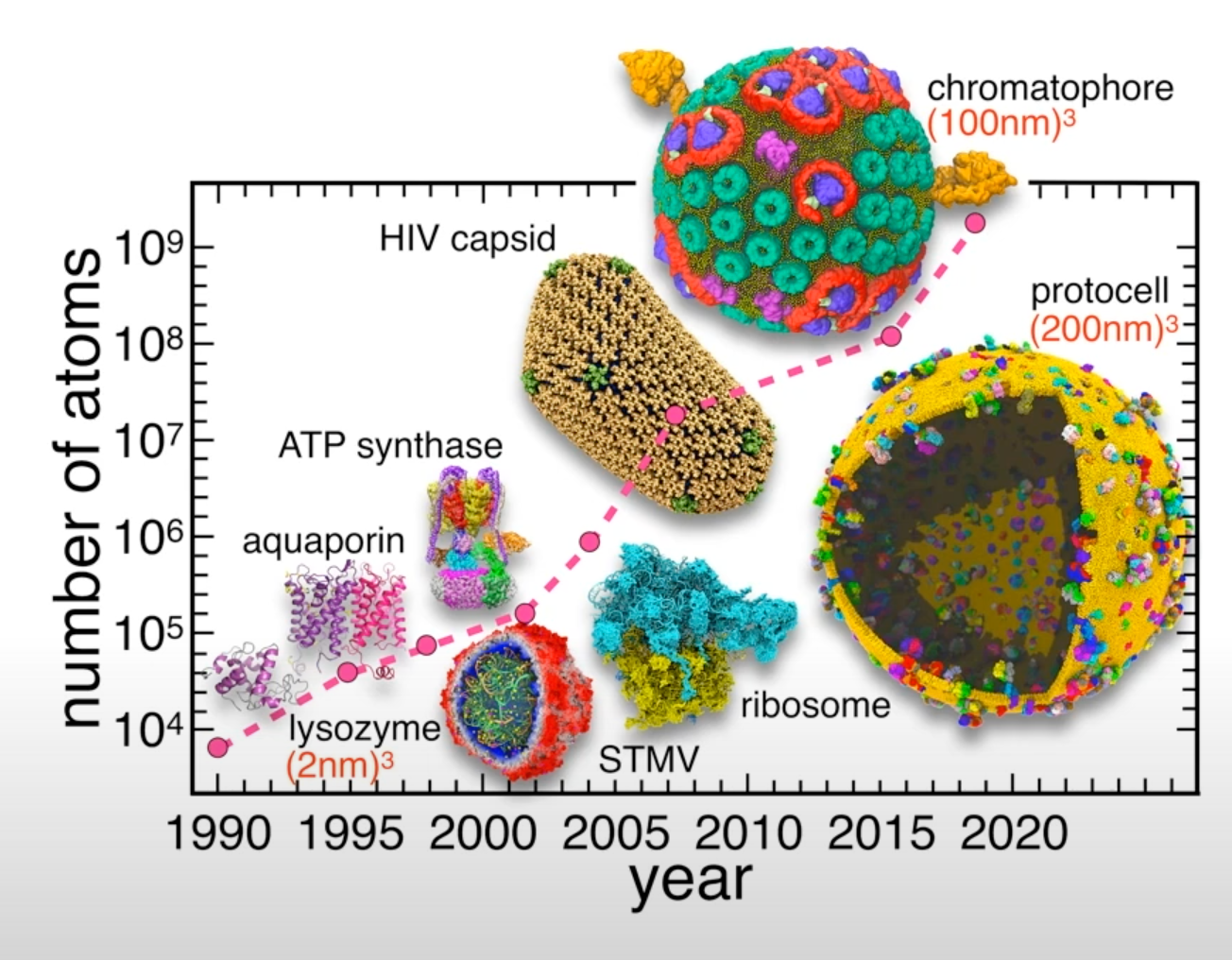

Nowadays, molecular modeling is done by analyzing dynamic structural models in computers. Historically, the modeling of molecules started long before the invention of computers. In the mid-1800’s structural models were suggested for molecules to explain their chemical and physical properties. First attempts at modeling interacting molecules were made by van der Waals in his pioneering work “About the continuity of the gas and liquid state” published in 1873. A simple equation representing molecules as spheres that are attracted to each other enabled him to predict the transition from gas to liquid. Modern molecular modeling and simulation still rely on many of the concepts developed over a century ago. Recently, MD simulations have been vastly improved in size and length. With longer simulations, we are able to study a broader range of problems under a wider range of conditions.

- Atoms and molecules interact with each other.

- We carry out molecular modeling by following and analyzing dynamic structural models in computers.

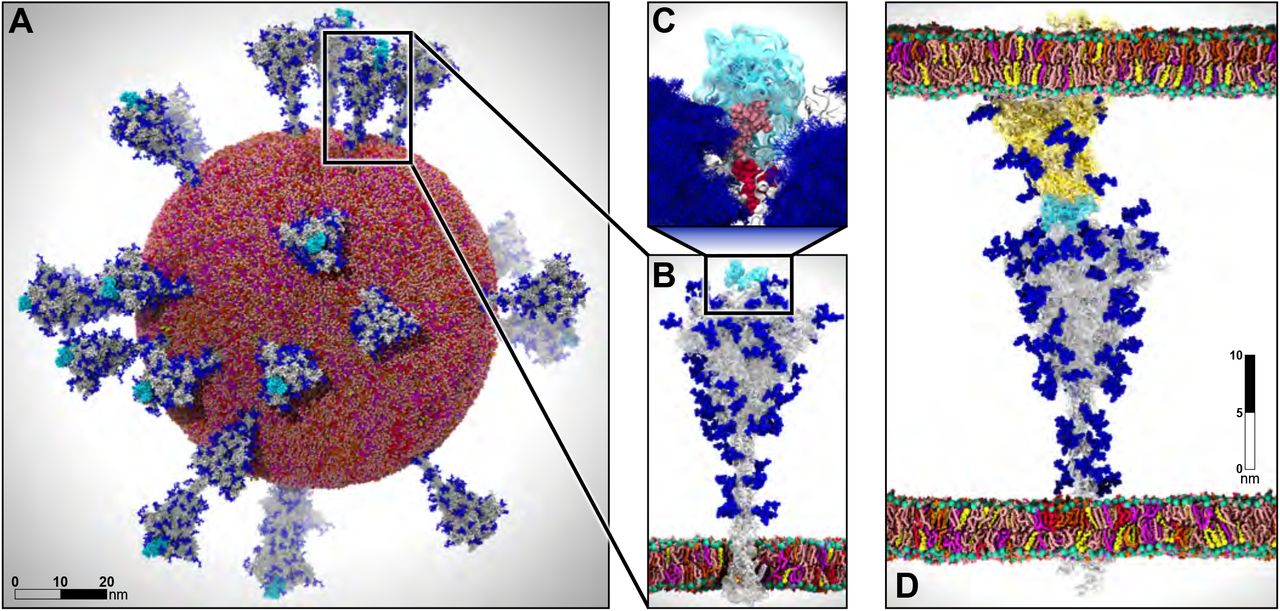

Figure from: AI-Driven Multiscale Simulations Illuminate Mechanisms of SARS-CoV-2 Spike Dynamics

Figure from: AI-Driven Multiscale Simulations Illuminate Mechanisms of SARS-CoV-2 Spike Dynamics

- The size and the length of MD simulations has been recently vastly improved.

- Longer and larger simulations allow us to tackle wider range of problems under a wide variety of conditions.

Recent example - simulation of the whole SARS-CoV-2 virion

One of the recent examples is simulation of the whole SARS-CoV-2 virion. The goal of this work was to understand how this virus infects cells. As a result of MD simulations, conformational transitions of the spike protein as well as its interactions with ACE2 receptors were identified. According to the simulation, the receptor binding domain of the spike protein, which is concealed from antibodies by polysaccharides, undergoes conformational transitions that allow it to emerge from the glycan shield and bind to the angiotensin-converting enzyme (ACE2) receptor in host cells.

The virion model included 305 million atoms, it had a lipid envelope of 75 nm in diameter with a full virion diameter of 120 nm. The multiscale simulations were performed using a combination of NAMD, VMD, AMBER. State-of-the-art methods such as the Weighted Ensemble Simulation Toolkit and ML were used. The whole system was simulated on the Summit supercomputer at Oak Ridge National Laboratory for a total time of 84 ns using NAMD. In addition a weighted ensemble of a smaller spike protein systems was simulated using GPU accelerated AMBER software.

This is one of the first works to explore how machine learning can be applied to MD studies. Using a machine learning algorithm trained on thousands of MD trajectory examples, conformational sampling was intelligently accelerated. As a result of applying machine learning techniques, it was possible to identify pathways of conformational transitions between active and inactive states of spike proteins.

- System size: 304,780,149 atoms, 350 Å × 350 Å lipid bilayer, simulation time 84 ns

Figure from AI-Driven Multiscale Simulations Illuminate Mechanisms of SARS-CoV-2 Spike Dynamics

Figure from AI-Driven Multiscale Simulations Illuminate Mechanisms of SARS-CoV-2 Spike Dynamics

- Showed that spike glycans can modulate the infectivity of the virus.

- Characterized interactions between the spike and the human ACE2 receptor.

- Used ML to identify conformational transitions between states and accelerate conformational sampling.

Goals

In this workshop, you will learn about molecular dynamics simulations and how to use different molecular dynamics simulation packages and utilities, such as NAMD, VMD, and AMBER. We will show you how to use Digital Research Alliance of Canada (Alliance) clusters for all steps of preparing the system, performing MD and analyzing the data. The emphasis will be on reproducibility and automation through scripting and batch processing.

- Introduce you to the method of molecular dynamics simulations.

- Guide you to using various molecular dynamics simulation packages and utilities.

- Teach how to use Digital Research Alliance of Canada (Alliance) clusters for system preparation, simulation and trajectory analysis.

The goal of this first lesson is to provide an overview of the theoretical foundation of molecular dynamics. As a result of this lesson, you will gain a deeper understanding of how particle interactions are modeled and how molecular dynamics are simulated.

The focus will be on reproducibility and automation by introducing scripting and batch processing.

The theory behind the method of MD.

Force Fields

The development of molecular dynamics was largely motivated by the desire to understand complex biological phenomena at the molecular level. To gain a deeper understanding of such processes, it was necessary to simulate large systems over a long period of time.

While the physical background of intermolecular interactions is known, there is a very complex mixture of quantum mechanical forces acting at a close distance. The forces between atoms and molecules arise from dynamic interactions between numerous electrons orbiting atoms. Since the interactions between electron clouds are so complex, they cannot be described analytically, nor can they be calculated numerically fast enough to enable a dynamic simulation on a relevant scale.

In order for molecular dynamics simulations to be feasible, it was necessary to be able to evaluate molecular interactions very quickly. To achieve this goal molecular interactions in molecular dynamics are approximated with a simple empirical potential energy function.

- Understanding complex biological phenomena requires simulations of large systems for a long time windows.

- The forces acting between atoms and molecules are very complex.

- Very fast method of evaluations molecular interactions is needed to achieve these goals.

Interactions are approximated with a simple empirical potential energy function.

The potential energy function U is a cornerstone of the MD simulations because it allows calculating forces. A force on an object is equal to the negative of the derivative of the potential energy function:

$\vec{F}=-\nabla{U}(\vec{r})$

Once we know forces acting on an object we can calculate how its position changes in time. To advance a simulation in time molecular dynamics applies classical Newton’s equation of motion stating that the rate of change of momentum \(\vec{p}\) of an object equals the force \(\vec{F}\) acting on it:

$ \vec{F}=\frac{d\vec{p}}{dt} $

To summarize, if we are able to determine a system’s potential energy, we can also determine the forces acting between the particles as well as how their positions change over time. In other words, we need to know the interaction potential for the particles in the system to calculate forces acting on atoms and advance a simulation.

- The potential energy function allows calculating forces: $ \vec{F}=-\nabla{U}(\vec{r}) $

- With the knowledge of the forces acting on an object, we can calculate how the position of that object changes over time: $ \vec{F}=\frac{d\vec{p}}{dt} $.

- Advance system with very small time steps assuming the velocities don’t change.

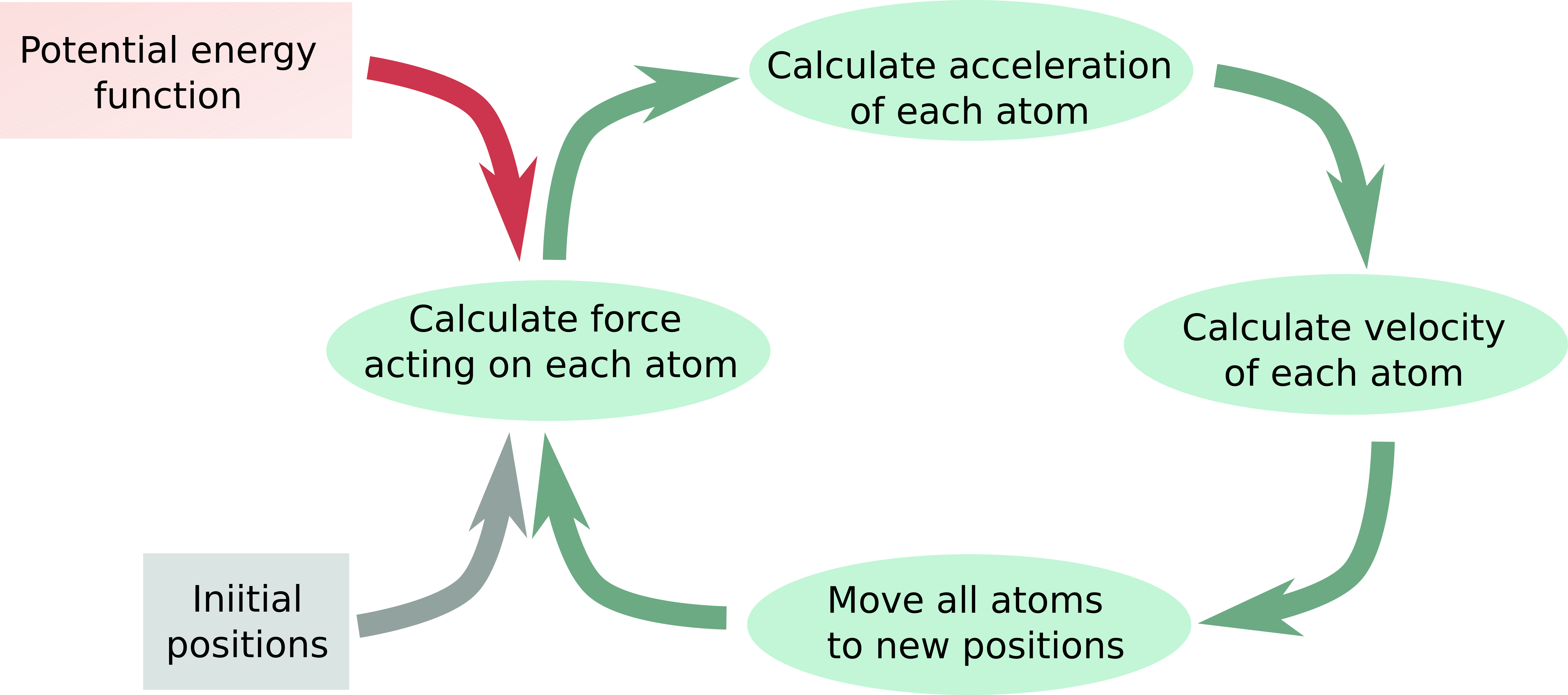

Let’s examine a typical workflow for simulations of molecular dynamics.

Flow diagram of MD simulation

Flow diagram of MD simulation

A force field is a set of empirical energy functions and parameters used to calculate the potential energy U as a function of the molecular coordinates.

Molecular dynamics programs use force fields to run simulations. A force field (FF) is a set of empirical energy functions and parameters allowing to calculate the potential energy U of a system of atoms and/or molecules as a function of the molecular coordinates. Classical molecular mechanics potential energy function used in MD simulations is an empirical function composed of non-bonded and bonded interactions:

- Potential energy function used in MD simulations is composed of non-bonded and bonded interactions:

$U(\vec{r})=\sum{U_{bonded}}(\vec{r})+\sum{U_{non-bonded}}(\vec{r})$

Typically MD simulations are limited to evaluating only interactions between pairs of atoms. In this approximation force fields are based on two-body potentials, and the energy of the whole system is described by the 2-dimensional force matrix of the pairwise interactions.

- Only pairwise interactions are considered.

Classification of force fields.

For convenience force fields can be divided into 3 general classes based on how complex they are.

Class 1 force fields.

In the class 1 force field dynamics of bond stretching and angle bending are described by simple harmonic motion, i.e. the magnitude of restoring force is assumed to be proportional to the displacement from the equilibrium position. As the energy of a harmonic oscillator is proportional to the square of the displacement, this approximation is called quadratic. In general, bond stretching and angle bending are close to harmonic only near the equilibrium. Higher-order anharmonic energy terms are required for a more accurate description of molecular motions. In the class 1 force field force matrix is diagonal because correlations between bond stretching and angle bending are omitted.

- Dynamics of bond stretching and angle bending is described by simple harmonic motion (quadratic approximation)

- Correlations between bond stretching and angle bending are omitted.

Examples: AMBER, CHARMM, GROMOS, OPLS

Class 2 force fields.

Class 2 force fields add anharmonic cubic and/or quartic terms to the potential energy for bonds and angles. Besides, they contain cross-terms describing the coupling between adjacent bonds, angles and dihedrals. Higher-order terms and cross terms allow for a better description of interactions resulting in a more accurate reproduction of bond and angle vibrations. However, much more target data is needed for the determination of these additional parameters.

- Add anharmonic cubic and/or quartic terms to the potential energy for bonds and angles.

- Contain cross-terms describing the coupling between adjacent bonds, angles and dihedrals.

Class 3 force fields.

Class 3 force fields explicitly add special effects of organic chemistry. For example polarization, stereoelectronic effects, electronegativity effect, Jahn–Teller effect, etc.

- Explicitly add special effects of organic chemistry such as polarization, stereoelectronic effects, electronegativity effect, Jahn–Teller effect, etc.

Examples of class 3 force fields are: AMOEBA, DRUDE

Energy Terms of Biomolecular Force Fields

What types of energy terms are used in Biomolecular Force Fields? For biomolecular simulations, most force fields are minimalistic class 1 force fields that trade off physical accuracy for the ability to simulate large systems for a long time. As we have already learned, potential energy function is composed of non-bonded and bonded interactions. Let’s have a closer look at these energy terms.

Non-Bonded Terms

The non-bonded potential terms describe non-electrostatic and electrostatic interactions between all pairs of atoms.

- Describe non-elecrostatic and electrostatic interactions between all pairs of atoms.

Non-electrostatic potential energy is most commonly described with the Lennard-Jones potential.

- Non-elecrostatic potential energy is most commonly described with the Lennard-Jones potential.

The Lennard-Jones potential

The Lennard-Jones (LJ) potential approximates the potential energy of non-electrostatic interaction between a pair of non-bonded atoms or molecules with a simple mathematical function:

- Approximates the potential energy of non-elecrostatic interaction between a pair of non-bonded atoms or molecules:

$V_{LJ}(r)=\frac{C12}{r^{12}}-\frac{C6}{r^{6}}$

The \(r^{-12}\) is used to approximate the strong Pauli repulsion that results from electron orbitals overlapping, while the \(r^{-6}\) term describes weaker attractive forces acting between local dynamically induced dipoles in the valence orbitals. While the attractive term is physically realistic (London dispersive forces have \(r^{-6}\) distance dependence), the repulsive term is a crude approximation of exponentially decaying repulsive interaction. The too steep repulsive part often leads to an overestimation of the pressure in the system.

- The \(r^{-12}\) term approximates the strong Pauli repulsion originating from overlap of electron orbitals.

- The \(r^{-6}\) term describes weaker attractive forces acting between local dynamically induced dipoles in the valence orbitals.

- The too steep repulsive part often leads to an overestimation of the pressure in the system.

The LJ potential is commonly expressed in terms of the well depth \(\epsilon\) (the measure of the strength of the interaction) and the van der Waals radius \(\sigma\) (the distance at which the intermolecular potential between the two particles is zero).

- The LJ potential is commonly expressed in terms of the well depth \(\epsilon\) and the van der Waals radius \(\sigma\):

$V_{LJ}(r)=4\epsilon\left[\left(\frac{\sigma}{r}\right)^{12}-\left(\frac{\sigma}{r}\right)^{6}\right]$

The LJ coefficients C are related to the \(\sigma\) and the \(\epsilon\) with the equations:

- Relation between C12, C6, \(\epsilon\) and \(\sigma\):

$C12=4\epsilon\sigma^{12},C6=4\epsilon\sigma^{6}$

Often, this potential is referred to as LJ-12-6. One of its drawbacks is that its 12th power repulsive part makes atoms too hard. Some forcefields, such as COMPASS, implement LJ-9-6 potential in order to address this problem. Atoms become softer by using repulsive terms of the 9th power, but they also become too sticky.

The Lennard-Jones Combining Rules

It is necessary to construct a matrix of the pairwise interactions in order to describe all LJ interactions in a simulation system. The LJ interactions between different types of atoms are computed by combining the LJ parameters. Different force fields use different combining rules. Using combining rules helps to avoid huge number of parameters for each combination of different atom types.

- The LJ interactions between different types of atoms are computed by combining the LJ parameters.

- Avoid huge number of parameters for each combination of different atom types.

- Different force fields use different combining rules.

Combination rules vary depending on the force field. Arithmetic and geometric means are the two most frequently used combination rules. There is little physical argument behind the geometric mean (Berthelot), while the arithmetic mean (Lorentz) is based on collisions between hard spheres.

- The arithmetic mean (Lorentz) is motivated by collision of hard spheres

- The geometric mean (Berthelot) has little physical argument.

Geometric mean:

\(C12_{ij}=\sqrt{C12_{ii}\times{C12_{jj}}}\qquad C6_{ij}=\sqrt{C6_{ii}\times{C6_{jj}}}\qquad\) (GROMOS)

\(\sigma_{ij}=\sqrt{\sigma_{ii}\times\sigma_{jj}}\qquad\qquad\qquad \epsilon_{ij}=\sqrt{\epsilon_{ii}\times\epsilon_{jj}}\qquad\qquad\) (OPLS)

Lorentz–Berthelot:

\(\sigma_{ij}=\frac{\sigma_{ii}+\sigma_{jj}}{2},\qquad \epsilon_{ij}=\sqrt{\epsilon_{ii}\times\epsilon_{jj}}\qquad\) (CHARM, AMBER).

This combining rule is a combination of the arithmetic mean for \(\sigma\) and the geometric mean for \(\epsilon\). It is known to overestimate the well depth

- Known issues: overestimates the well depth

Less common combining rules.

Waldman–Hagler:

\(\sigma_{ij}=\left(\frac{\sigma_{ii}^{6}+\sigma_{jj}^{6}}{2}\right)^{\frac{1}{6}}\) , \(\epsilon_{ij}=\sqrt{\epsilon_{ij}\epsilon_{jj}}\times\frac{2\sigma_{ii}^3\sigma_{jj}^3}{\sigma_{ii}^6+\sigma_{jj}^6}\)

This combining rule was developed specifically for simulation of noble gases.

Hybrid (the Lorentz–Berthelot for H and the Waldman–Hagler for other elements). Implemented in the AMBER-ii force field for perfluoroalkanes, noble gases, and their mixtures with alkanes.

The Buckingham potential

The Buckingham potential replaces the repulsive \(r^{-12}\) term in Lennard-Jones potential by exponential function of distance:

- Replaces the repulsive \(r^{-12}\) term in Lennard-Jones potential with exponential function of distance:

$V_{B}(r)=Aexp(-Br) -\frac{C}{r^{6}}$

Exponential function describes electron density more realistically but it is computationally more expensive to calculate. While using Buckingham potential there is a risk of “Buckingham Catastrophe”, the condition when at short-range electrostatic attraction artificially overcomes the repulsive barrier and collision between atoms occurs. This can be remedied by the addition of \(r^{-12}\) term.

- Exponential function describes electron density more realistically

- Computationally more expensive to calculate.

- Risk of “buckingham catastrophe” at short distances.

There is only one combining rule for Buckingham potential in GROMACS:

$A_{ij}=\sqrt{(A_{ii}A_{jj})}$

$B_{ij}=2/(\frac{1}{B_{ii}}+\frac{1}{B_{jj}})$

$C_{ij}=\sqrt{(C_{ii}C_{jj})}$

Combining rule (GROMACS):

\(A_{ij}=\sqrt{(A_{ii}A_{jj})} \qquad B_{ij}=2/(\frac{1}{B_{ii}}+\frac{1}{B_{jj}}) \qquad C_{ij}=\sqrt{(C_{ii}C_{jj})}\)

Specifying Combining Rules

GROMACS

Combining rule is specified in the [defaults] section of the forcefield.itp file (in the column ‘comb-rule’).

[ defaults ] ; nbfunc comb-rule gen-pairs fudgeLJ fudgeQQ 1 2 yes 0.5 0.8333Geometric mean is selected by using rules 1 and 3; Lorentz–Berthelot rule is selected using rule 2.

GROMOS force field requires rule 1; OPLS requires rule 3; CHARM and AMBER require rule 2

The type of potential function is specified in the ‘nbfunc’ column: 1 selects Lennard-Jones potential, 2 selects Buckingham potential.

NAMD

By default, Lorentz–Berthelot rules are used. Geometric mean can be turned on in the run parameter file:

vdwGeometricSigma yes

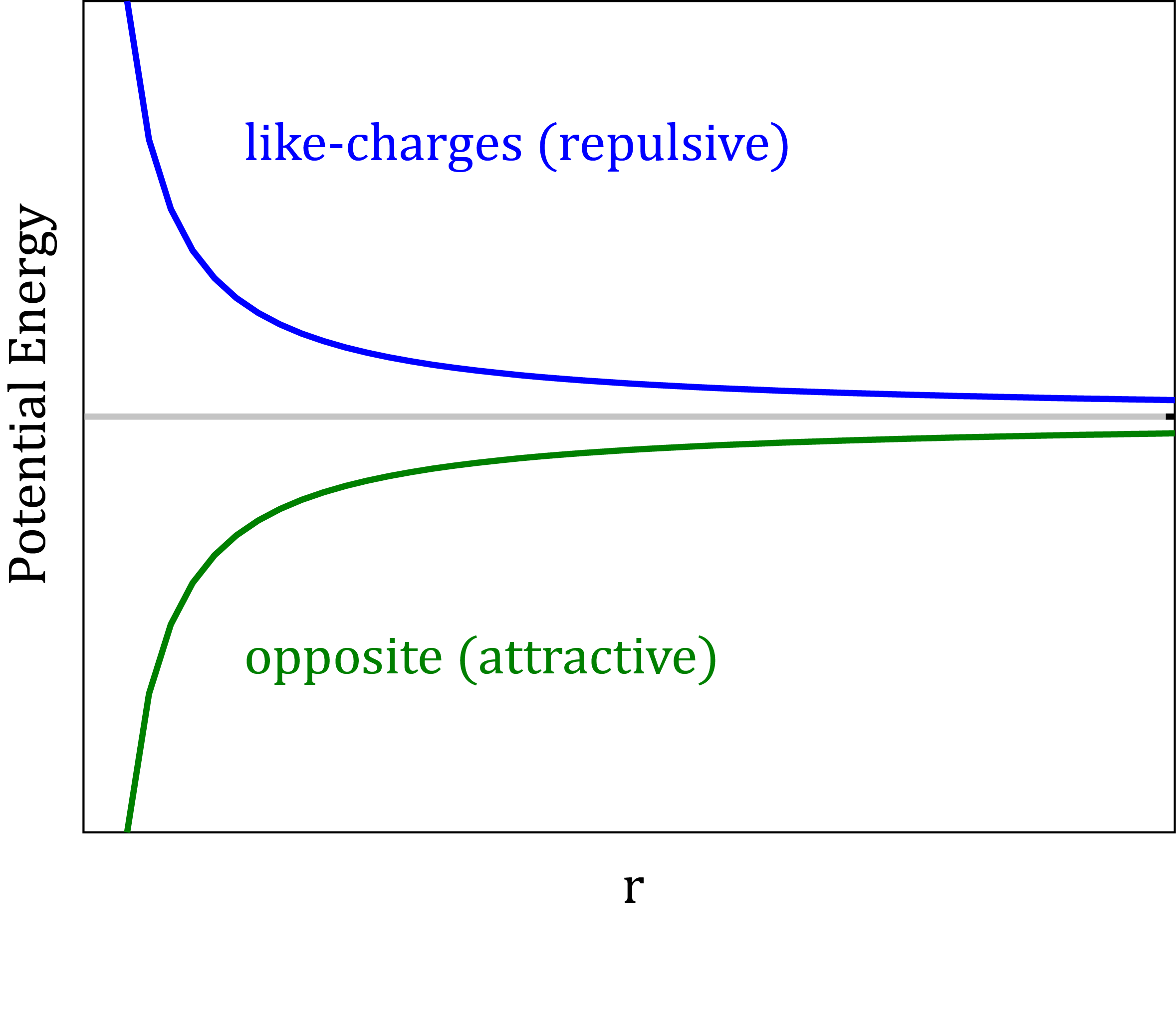

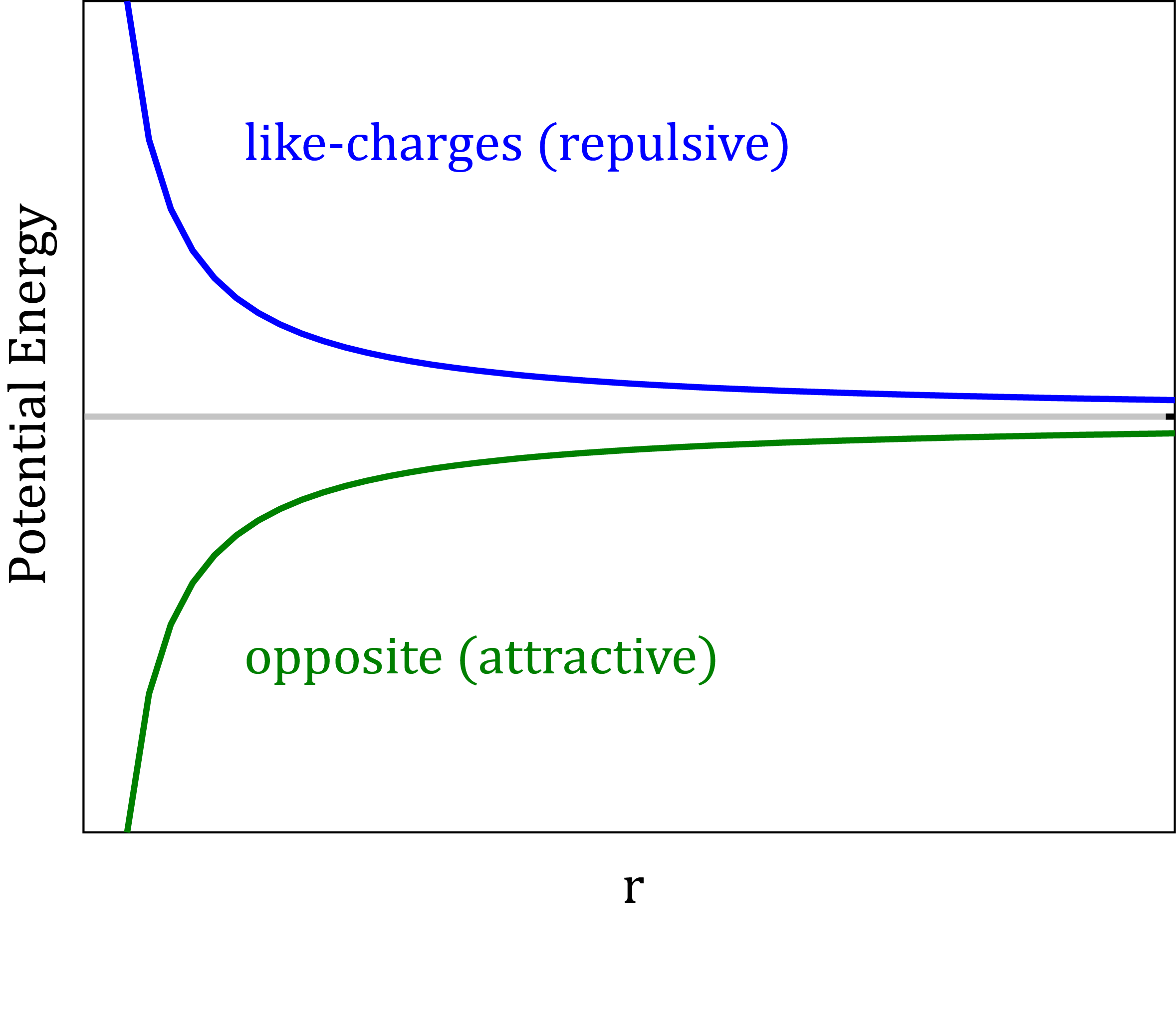

The electrostatic potential

To describe the electrostatic interactions in MD the point charges are assigned to the positions of atomic nuclei. The atomic charges are derived using QM methods with the goal to approximate the electrostatic potential around a molecule. The electrostatic potential is described with the Coulomb’s law:

$V_{Elec}=\frac{q_{i}q_{j}}{4\pi\epsilon_{0}\epsilon_{r}r_{ij}}$

where rij is the distance between the pair of atoms, qi and qj are the charges on the atoms i and j,\(\epsilon_{0}\) is the permittivity of vacuum. and \(\epsilon_{r}\) is the relative permittivity.

- Point charges are assigned to the positions of atomic nuclei to approximate the electrostatic potential around a molecule.

- The Coulomb’s law: $V_{Elec}=\frac{q_{i}q_{j}}{4\pi\epsilon_{0}\epsilon_{r}r_{ij}}$

Short-range and Long-range Interactions

Interactions can be classified as short-range and long-range. In a short-range interaction, the potential decreases faster than r-d, where r is the distance between the particles and d is the dimension. Otherwise the interaction is long-ranged. Accordingly, the Lennard-Jones interaction is short-ranged, while the Coulomb interaction is long-ranged.

- Interaction is short-range if the potential decreases faster than r-3

- The Lennard-Jones interactions are short-ranged, r-6.

- The Coulomb interactions are long-ranged, r-1.

Counting Non-Bonded Interactions

How many non-bonded interactions are in the system with ten Argon atoms? 10, 45, 90, or 200?

Solution

Argon atoms are neutral, so there is no Coulomb interaction. Atoms don’t interact with themselves and the interaction ij is the same as the interaction ji. Thus the total number of pairwise non-bonded interactions is (10x10 - 10)/2 = 45.

Bonded Terms

Bonded terms describe interactions between atoms within molecules. Bonded terms include several types of interactions, such as bond stretching terms, angle bending terms, dihedral or torsional terms, improper dihedrals, and coupling terms.

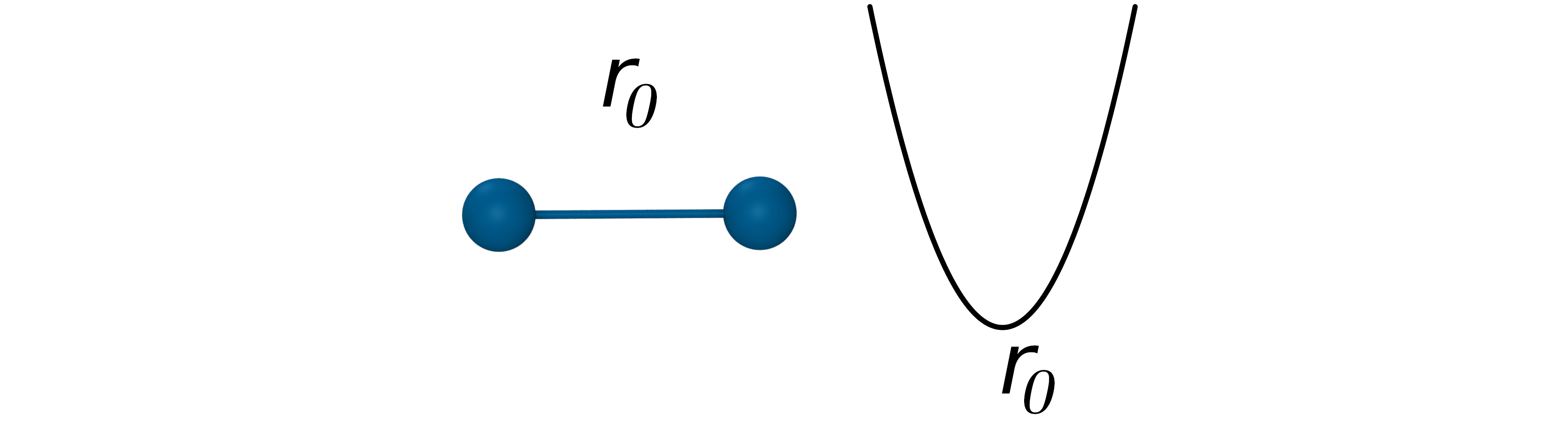

The bond potential

The bond potential is used to model the interaction of covalently bonded atoms in a molecule. Bond stretch is approximated by a simple harmonic function describing oscillation about an equilibrium bond length r0 with bond constant kb:

- Oscillation about an equilibrium bond length r0 with bond constant kb: $V_{Bond}=k_b(r_{ij}-r_0)^2$

$V_{Bond}=k_b(r_{ij}-r_0)^2$

In a bond, energy oscillates between the kinetic energy of the mass of the atoms and the potential energy stored in the spring connecting them.

This is a fairly poor approximation at extreme bond stretching, but bonds are so stiff that it works well for moderate temperatures. Morse potentials are more accurate, but more expensive to calculate because they involve exponentiation. They are widely used in spectroscopic applications.

- Poor approximation at extreme stretching, but it works well at moderate temperatures.

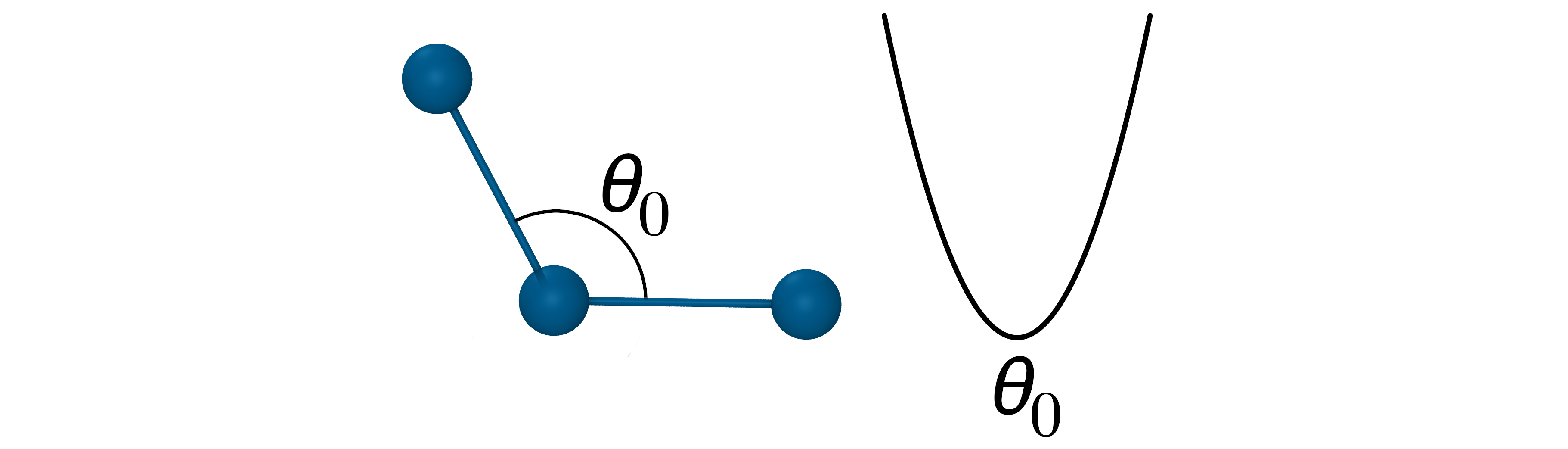

The angle potential

The angle potential describes the bond bending energy. It is defined for every triplet of bonded atoms. It is also approximated by a harmonic function describing oscillation about an equilibrium angle \(\theta_{0}\) with force constant \(k_\theta\) :

- Oscillation about an equilibrium angle \(\theta_{0}\) with force constant \(k_\theta\): $V_{Angle}=k_\theta(\theta_{ijk}-\theta_0)^2$

$V_{Angle}=k_\theta(\theta_{ijk}-\theta_0)^2$

The force constants for angle potential are about 5 times smaller that for bond stretching.

- The force constants for angle potential are about 5 times smaller that for bond stretching.

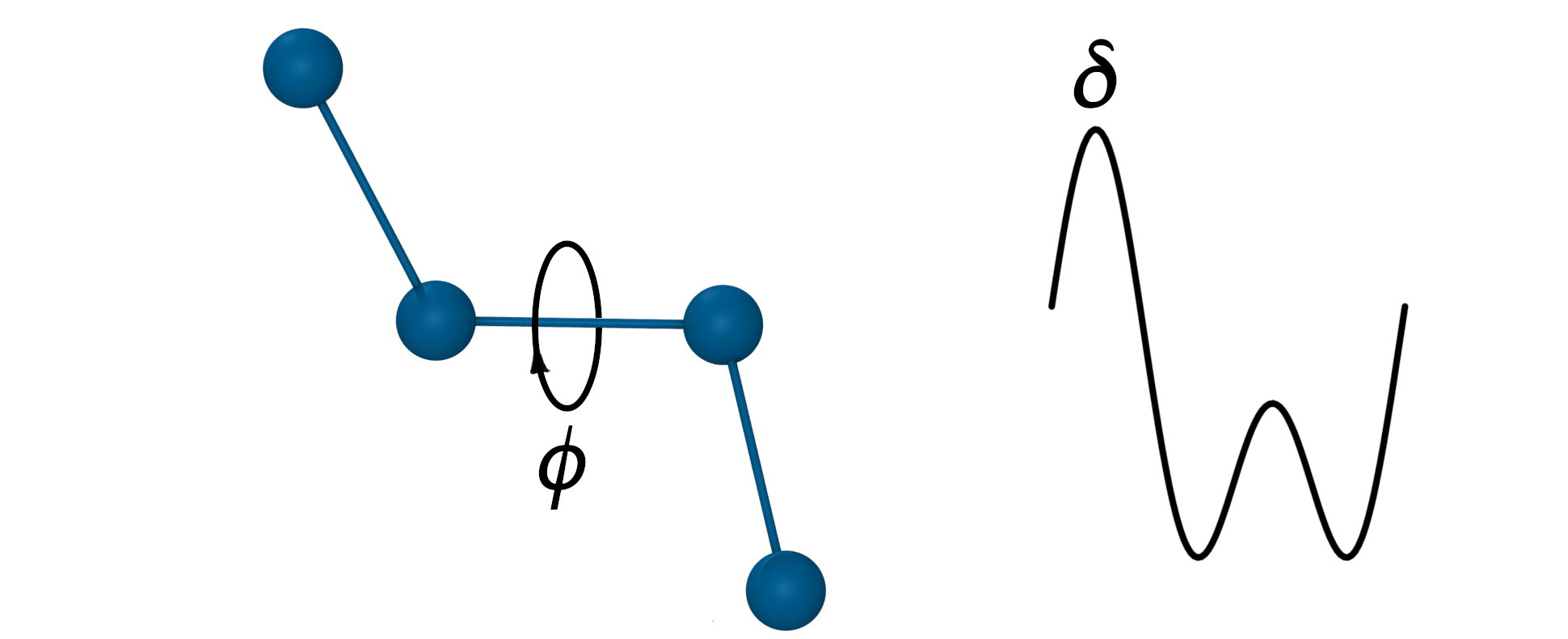

The torsion (dihedral) angle potential

The torsion energy is defined for every 4 sequentially bonded atoms. The torsion angle \(\phi\) is the angle of rotation about the covalent bond between the middle two atoms and the potential is given by:

- Defined for every 4 sequentially bonded atoms.

- Sum of any number of periodic functions, n - periodicity, \(\delta\) - phase shift angle.

$V_{Dihed}=k_\phi(1+cos(n\phi-\delta)) + …$

Where the non-negative integer constant n defines periodicity and \(\delta\) is the phase shift angle.

- n represents the number of potential maxima or minima generated in a 360° rotation.

Dihedrals of multiplicity of n=2 and n=3 can be combined to reproduce energy differences of cis/trans and trans/gauche conformations. The example above shows the torsion potential of ethylene glycol.

- Combination of n=2 and n=3 dihedrals used to reproduce cis/trans and trans/gauche energy differences in ethylene glycol

The improper torsion potential

Improper torsion potentials are defined for groups of 4 bonded atoms where the central atom i is connected to the 3 peripheral atoms j,k, and l. Such group can be seen as a pyramid and the improper torsion potential is related to the distance of the central atom from the base of the pyramid. This potential is used mainly to keep molecular structures planar. As there is only one energy minimum the improper torsion term can be given by a harmonic function:

- Also known as ‘out-of-plane bending’

- Defined for a group of 4 bonded atoms where the central atom i is connected to the 3 peripheral atoms j,k, and l.

- Used to enforce planarity.

- Given by a harmonic function: $V_{Improper}=k_\phi(\phi-\phi_0)^2$

$V_{Improper}=k_\phi(\phi-\phi_0)^2$

Where the dihedral angle \(\phi\) is the angle between planes ijk and ijl.

- The dihedral angle \(\phi\) is the angle between planes ijk and ijl.

Coupling Terms

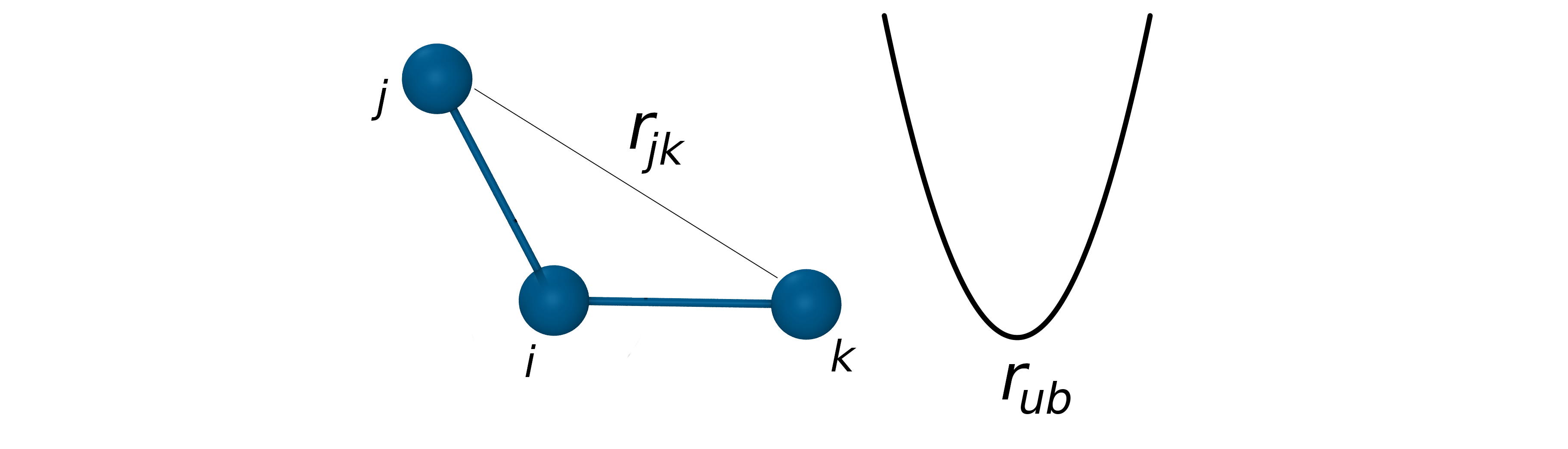

The Urey-Bradley potential

It is known that as a bond angle is decreased, the adjacent bonds stretch to reduce the interaction between the outer atoms of the bonded triplet. This means that there is a coupling between bond length and bond angle. This coupling can be described by the Urey-Bradley potential. The Urey-Bradley term is defined as a (non-covalent) spring between the outer i and k atoms of a bonded triplet ijk. It is approximated by a harmonic function describing oscillation about an equilibrium distance rub with force constant kub:

- Coupling between bond length and bond angle is described by the Urey-Bradley potential.

- The Urey-Bradley term is defined as a spring between the outer atoms of a bonded triplet.

- Approximated by a harmonic function: $V_{UB}=k_{ub}(r_{jk}-r_{ub})^2$

$V_{UB}=k_{ub}(r_{ik}-r_{ub})^2$

U-B terms are used to improve agreement with vibrational spectra when a harmonic bending term alone would not adequately fit. These phenomena are largely inconsequential for the overall conformational sampling in a typical biomolecular/organic simulation. The Urey-Bradley term is implemented in the CHARMM force fields.

- Improve agreement with vibrational spectra.

- Do not affect overall conformational sampling.

- Implemented in CHARMM and AMOEBA force fields.

CHARMM CMAP correction potential

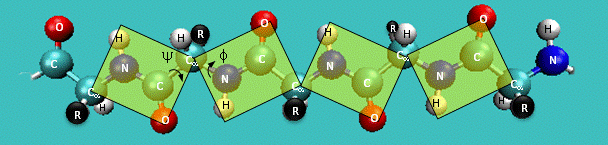

A protein can be seen as a series of linked sequences of peptide units which can rotate around phi/psi angles (peptide bond N-C is rigid). These phi/psi angles define the conformation of the backbone.

- Peptide torsion angles: phi, psi, omega.

- A protein can be seen as a series of linked sequences of peptide units which can rotate around phi/psi angles.

- phi/psi angles define the conformation of the backbone.

phi/psi dihedral angle potentials correct for force field deficiencies such as errors in non-bonded interactions, electrostatics, lack of coupling terms, inaccurate combination, etc.

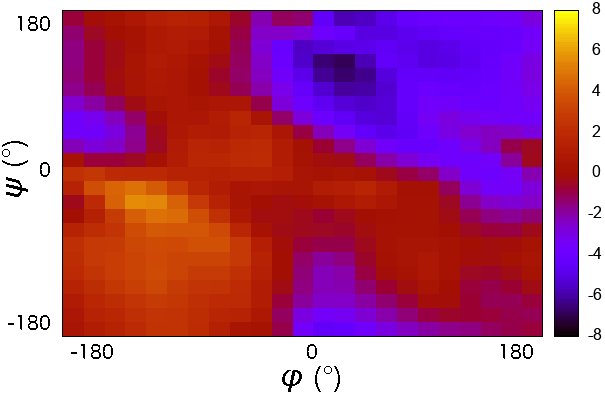

CMAP potential is a correction map to the backbone dihedral energy. It was developed to improve the sampling of backbone conformations. CMAP parameter does not define a continuous function. it is a grid of energy correction factors defined for each pair of phi/psi angles typically tabulated with 15 degree increments.

- phi/psi dihedral angle potentials correct for force field deficiencies such as errors in non-bonded interactions, electrostatics, lack of coupling terms, inaccurate combination, etc.

- CMAP potential was developed to improve the sampling of backbone conformations.

- CMAP parameter does not define a continuous function.

- it is a grid of energy correction factors defined for each pair of phi/psi angles typically tabulated with 15 degree increments.

The grid of energy correction factors is constructed using QM data for every combination of \(\phi/\psi\) dihedral angles of the peptide backbone and further optimized using empirical data.

CMAP potential was initially applied to improve CHARMM22 force field. CMAP corrections were later implemented in AMBER force fields ff99IDPs (force field for intrinsically disordered proteins), ff12SB-cMAP (force field for implicit-solvent simulations), and ff19SB.

Energy scale of potential terms

| \(k_BT\) at 298 K | ~ 0.593 | \(\frac{kcal}{mol}\) |

| Bond vibrations | ~ 100 ‐ 500 | \(\frac{kcal}{mol \cdot \unicode{x212B}^2}\) |

| Bond angle bending | ~ 10 - 50 | \(\frac{kcal}{mol \cdot deg^2}\) |

| Dihedral rotations | ~ 0 - 2.5 | \(\frac{kcal}{mol \cdot deg^2}\) |

| van der Waals | ~ 0.5 | \(\frac{kcal}{mol}\) |

| Hydrogen bonds | ~ 0.5 - 1.0 | \(\frac{kcal}{mol}\) |

| Salt bridges | ~ 1.2 - 2.5 | \(\frac{kcal}{mol}\) |

Exclusions from Non-Bonded Interactions

Pairs of atoms connected by chemical bonds are normally excluded from computation of non-bonded interactions because bonded energy terms replace non-bonded interactions. In biomolecular force fields all pairs of connected atoms separated by up to 2 bonds (1-2 and 1-3 pairs) are excluded from non-bonded interactions.

Computation of the non-bonded interaction between 1-4 pairs depends on the specific force field. Some force fields exclude VDW interactions and scale down electrostatic (AMBER) while others may modify both or use electrostatic as is.

- In pairs of atoms connected by chemical bonds bonded energy terms replace non-bonded interactions.

- All pairs of connected atoms separated by up to 2 bonds (1-2 and 1-3 pairs) are excluded from non-bonded interactions. It is assumed that they are properly described with bond and angle potentials.

The 1-4 interaction turns out to be an intermediate case where both bonded and non-bonded interactions are required for a reasonable description. Due to the short distance between the 1–4 atoms full strength non-bonded interactions are too strong, and in most cases lack fine details of local internal conformational degrees of freedom. To address this problem in many cases a compromise is made to treat this particular pair partially as a bonded and partially as a non-bonded interaction.

Non-bonded interactions between 1-4 pairs depends on the specific force field. Some force fields exclude VDW interactions and scale down electrostatic (AMBER) while others may modify both or use electrostatic as is.

- 1-4 interaction represents a special case where both bonded and non-bonded interactions are required for a reasonable description. However, due to the short distance between the 1–4 atoms full strength non-bonded interactions are too strong and must be scaled down.

- Non-bonded interaction between 1-4 pairs depends on the specific force field.

- Some force fields exclude VDW interactions and scale down electrostatic (AMBER) while others may modify both or use electrostatic as is.

What Information Can MD Simulations Provide?

With the help of MD it is possible to model phenomena that cannot be studied experimentally. For example

- Understand atomistic details of conformational changes, protein unfolding, interactions between proteins and drugs

- Study thermodynamics properties (free energies, binding energies)

- Study biological processes such as (enzyme catalysis, protein complex assembly, protein or RNA folding, etc).

For more examples of the types of information MD simulations can provide read the review article: Molecular Dynamics Simulation for All.

Specifying Exclusions

GROMACS

The exclusions are generated by grompp as specified in the [moleculetype] section of the molecular topology .top file:

[ moleculetype ] ; name nrexcl Urea 3 ... [ exclusions ] ; ai aj 1 2 1 3 1 4In the example above non-bonded interactions between atoms that are no farther than 3 bonds are excluded (nrexcl=3). Extra exclusions may be added explicitly in the [exclusions] section.

The scaling factors for 1-4 pairs, fudgeLJ and fudgeQQ, are specified in the [defaults] section of the forcefield.itp file. While fudgeLJ is used only when gen-pairs is set to ‘yes’, fudgeQQ is always used.

[ defaults ] ; nbfunc comb-rule gen-pairs fudgeLJ fudgeQQ 1 2 yes 0.5 0.8333NAMD

Which pairs of bonded atoms should be excluded is specified by the exclude parameter.

Acceptable values: none, 1-2, 1-3, 1-4, or scaled1-4exclude scaled1-4 1-4scaling 0.83 scnb 2.0If scaled1-4 is set, the electrostatic interactions for 1-4 pairs are multiplied by a constant factor specified by the 1-4scaling parameter. The LJ interactions for 1-4 pairs are divided by scnb.

Key Points

Molecular dynamics simulates atomic positions in time using forces acting between atoms

The forces in molecular dynamics are approximated by simple empirical functions

Fast Methods to Evaluate Forces

Overview

Teaching: 30 min

Exercises: 0 minQuestions

Why the computation of non-bonded interactions is the speed limiting factor?

How are non-bonded interactions computed efficiently?

What is a cutoff distance?

How to choose the appropriate cutoff distance?

Objectives

Understand how non-bonded interactions are truncated.

Understand how a subset of particles for calculation of short-range non-bonded is selected.

Learn how to choose the appropriate cutoff distance and truncation method.

Learn how to select cutoff distance and truncation method in GROMACS and NAMD.

Challenges in calculation of non bonded interactions.

The most computationally demanding part of a molecular dynamics simulation is the calculation of the non-bonded terms of the potential energy function. Since the non-bonded energy terms between every pair of atoms must be evaluated, the number of calculations increases as the square of the number of atoms. In order to speed up the computation, only interactions between atoms separated by less than a preset cutoff distance are considered.

It is essential to use an efficient way of excluding pairs of atoms separated by a long distance in order to accelerate force computation. Such methods are known as “neighbour searching methods”.

- The number of non-bonded interactions increases as the square of the number of atoms.

- The most computationally demanding part of a molecular dynamics simulation is the calculation of the non-bonded interactions.

- Simulation can be significantly accelerated by limiting the number of evaluated non bonded interactions.

- Exclude pairs of atoms separated by long distance.

- Maintain a list of all particles within a predefined cutoff distance of each other.

Neighbour Searching Methods

The search for pairs of particles that are needed for calculation of the short-range non-bonded interactions is usually accelerated by maintaining a list of all particles within a predefined cutoff distance of each other.

Particle neighbours are determined either by dividing the simulation system into grid cells (cell lists) or by constructing a neighbour list for each particle (Verlet lists).

- Divide simulation system into grid cells - cell lists.

- Compile a list of neighbors for each particle by searching all pairs - Verlet lists.

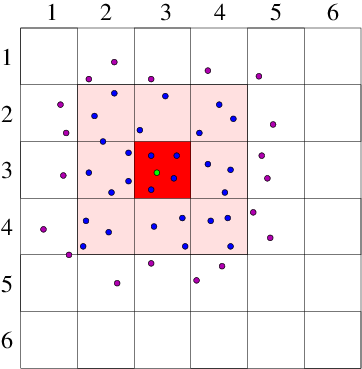

Cell Lists

The cell lists method divides the simulation domain into n cells with edge length greater or equal to the cutoff radius of the interaction to be computed. With this cell size, all particles within the cutoff will be considered. The interaction potential for each particle is then computed as the sum of the pairwise interactions between the current particle and all other particles in the same cell plus all particles in the neighbouring cells (26 cells for 3-dimensional simulation).

- Divide the simulation domain into cells with edge length greater or equal to the cutoff distance.

- Interaction potential of a particle is the sum of the pairwise interactions with all other particles in the same cell and all neighboring cells.

Verlet Lists

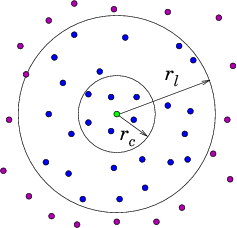

A Verlet list stores all particles within the cutoff distance of every particle plus some extra buffer distance. Although all pairwise distances must be evaluated to construct the Verlet list, it can be used for several consecutive time steps until any particle has moved more than half of the buffer distance. At this point, the list is invalidated and the new list must be constructed.

- Verlet list stores all particles within the cutoff distance plus some extra buffer distance.

- All pairwise distances must be evaluated.

- List is valid until any particle has moved more than half of the buffer distance.

Verlet offers more efficient computation of pairwise interactions at the expense of relatively large memory requirement which can be a limiting factor.

In practice, almost all simulations are run in parallel and use a combination of spatial decomposition and Verlet lists.

- Efficient computation of pairwise interactions.

- Relatively large memory requirement.

- In practice, almost all simulations use a combination of spatial decomposition and Verlet lists.

Problems with Truncation of Lennard-Jones Interactions and How to Avoid Them?

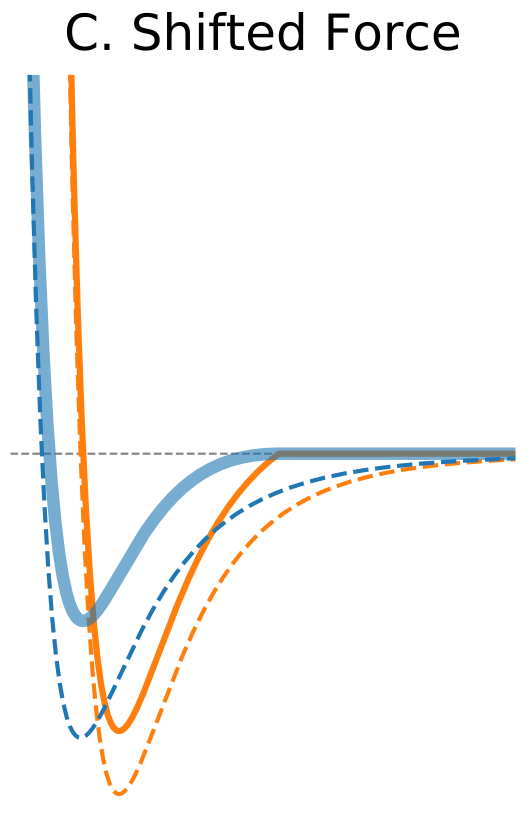

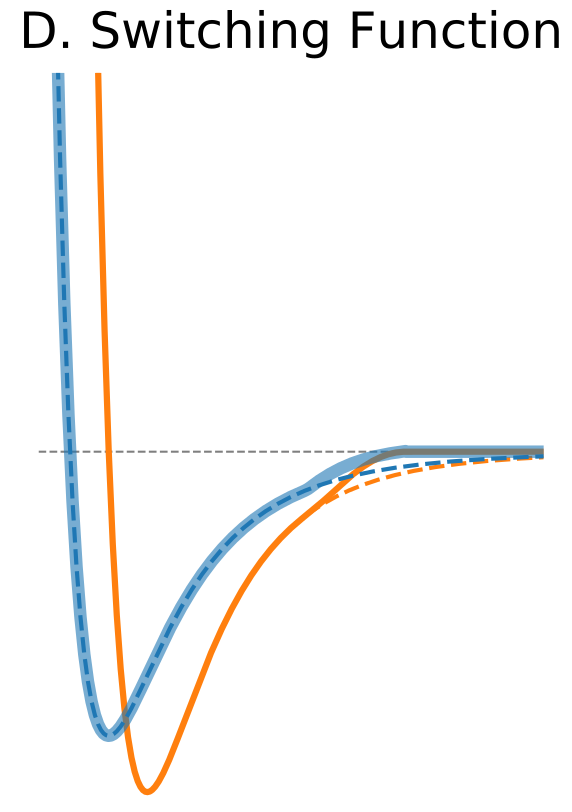

We have learned that the LJ potential is always truncated at the cutoff distance. A cutoff introduces a discontinuity in the potential energy at the cutoff value. As forces are computed by differentiating potential, a sharp difference in potential may result in nearly infinite forces at the cutoff distance (Figure 1A). There are several approaches to minimize the impact of the cutoff.

- LJ potential is always truncated at the cutoff distance.

- Truncation introduces a discontinuity in the potential energy.

- A sharp change in potential may result in nearly infinite forces.

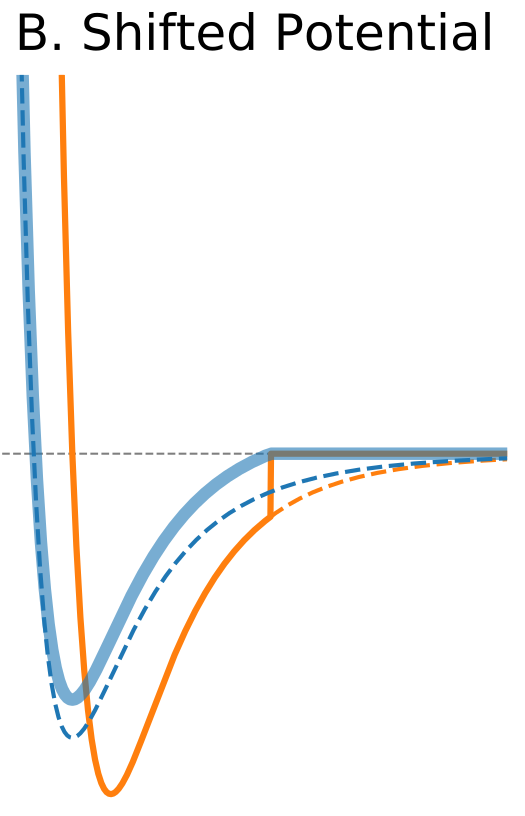

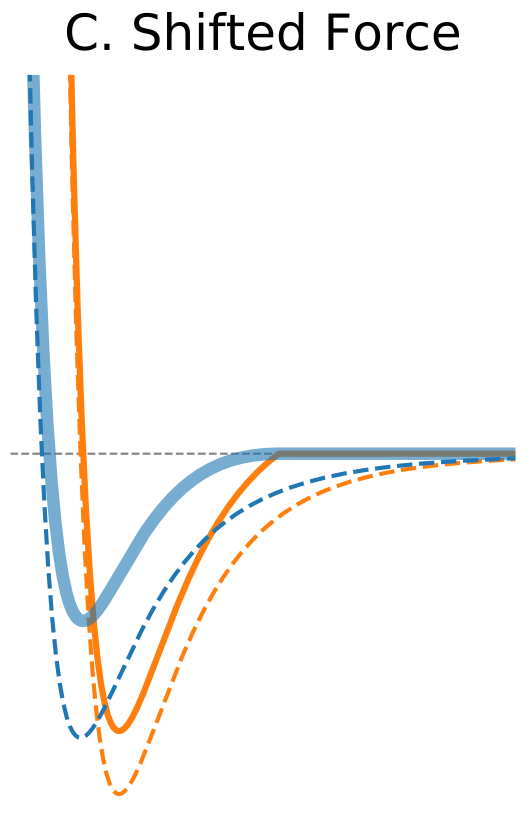

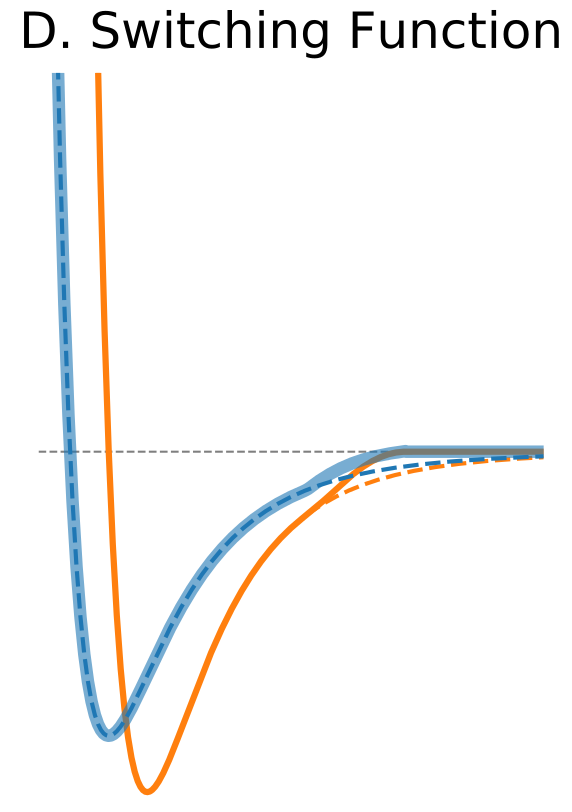

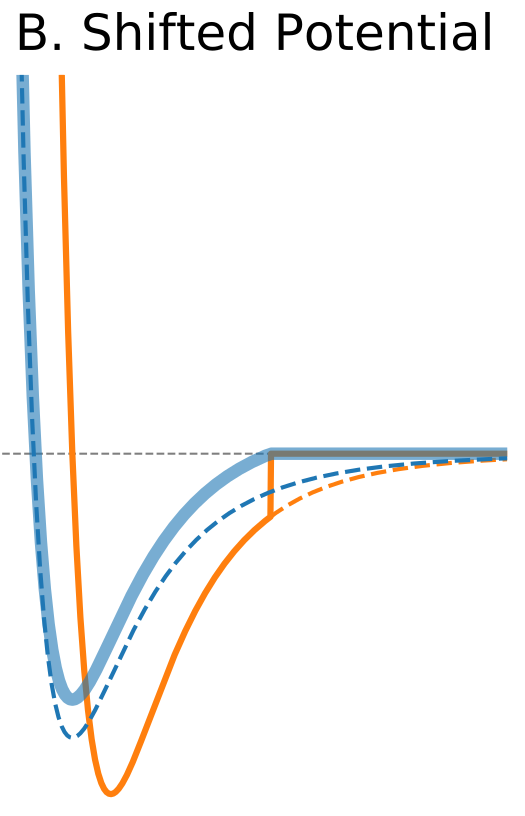

Figure 1. The Distance Dependence of Potential and Force for Different Truncation Methods

| Shifted potential | |

The standard solution is to shift the whole potential uniformly by adding a constant at values below cutoff (shifted potential method, Figure 1B). This ensures continuity of the potential at the cutoff distance and avoids infinite forces. The addition of a constant term does not change forces at the distances below cutoff because it disappears when the potential is differentiated. However, it introduces a discontinuity in the force at the cutoff distance. Particles will experience sudden un-physical acceleration when other particles cross their respective cutoff distance. Another drawback is that when potential is shifted the total potential energy changes.</div> |

|

| Shifted Force | |

One way to address discontinuity in forces is to shift the whole force so that it vanishes at the cutoff distance (Figure 1C). As opposed to the potential shift method the shifted forces cutoff modifies equations of motion at all distances. Nevertheless, the shifted forces method has been found to yield better results at shorter cutoff values compared to the potential shift method (Toxvaerd, 2011). |

|

| Switching Function | |

Another solution is to modify the shape of the potential function near the cutoff boundary to truncate the non-bonded interaction smoothly at the cutoff distance. This can be achieved by the application of a switching function, for example, polynomial function of the distance. If the switching function is applied the switching parameter specifies the distance at which the switching function starts to modify the LJ potential to bring it to zero at the cutoff distance. The advantage is that the forces are modified only near the cutoff boundary and they approach zero smoothly. |

|

| Shifted potential | |

$\circ$ Shift the whole potential uniformly by adding a constant at values below cutoff. $\circ$ Avoids infinite forces. $\circ$ Does not change forces at the distances below cutoff. $\circ$ Introduces a discontinuity in the force at the cutoff distance. $\circ$ Modifies total potential energy. |

|

| Shifted Force | |

$\circ$ Shift the whole force so that it vanishes at the cutoff distance. $\circ$ Modifies equations of motion at all distances. $\circ$ Better results at shorter cutoff values compared to the potential shift. |

|

| Switching Function | |

$\circ$ Modify the shape of the potential function near cutoff. $\circ$ Forces are modified only near the cutoff boundary and they approach zero smoothly. |

|

How to Choose the Appropriate Cutoff Distance?

A common practice is to truncate at 2.5 \(\sigma\) and this practice has become a minimum standard for truncation. At this distance, the LJ potential is about 1/60 of the well depth \(\epsilon\), and it is assumed that errors arising from this truncation are small enough. The dependence of the cutoff on \(\sigma\) means that the choice of the cutoff distance depends on the force field and atom types used in the simulation.

- A common practice is to truncate at 2.5 \(\sigma\).

- At this distance, the LJ potential is about 1/60 of the well depth \(\epsilon\).

- The choice of the cutoff distance depends on the force field and atom types.

For example for the O, N, C, S, and P atoms in the AMBER99 force field the values of \(\sigma\) are in the range 1.7-2.1, while for the Cs ions \(\sigma=3.4\). Thus the minimum acceptable cutoff, in this case, is 8.5.

In practice, increasing cutoff does not necessarily improve accuracy. There are documented cases showing opposite tendency (Yonetani, 2006). Each force field has been developed using a certain cutoff value, and effects of the truncation were compensated by adjustment of some other parameters. If you use cutoff 14 for the force field developed with the cutoff 9, then you cannot claim that you used this original forcefield. To ensure consistency and reproducibility of simulations, you should choose a cutoff that is appropriate for the force field.

- Increasing cutoff does not necessarily improve accuracy.

- Each force field has been developed using a certain cutoff value, and effects of the truncation were compensated by adjustment of some other parameters.

- To ensure consistency and reproducibility of simulation you should choose the cutoff appropriate for the force field:

Table 1. Cutoffs Used in Development of the Common Force Fields

| AMBER | CHARMM | GROMOS | OPLS |

|---|---|---|---|

| 8 Å, 10 Å (ff19SB) | 12 Å | 14 Å | 11-15 Å (depending on a molecule size) |

Properties that are very sensitive to the choice of cutoff

Different molecular properties are affected differently by various cutoff approximations. Examples of properties that are very sensitive to the choice of cutoff include the surface tension (Ahmed, 2010), the solid–liquid coexistence line (Grosfils, 2009), the lipid bi-layer properties (Huang, 2014), and the structural properties of proteins (Piana, 2012).

- the surface tension (Ahmed, 2010),

- the solid–liquid coexistence line (Grosfils, 2009),

- the lipid bi-layer properties (Huang, 2014),

- the structural properties of proteins (Piana, 2012).

For such quantities even a cutoff at 2.5 \(\sigma\) gives inaccurate results, and in some cases the cutoff must be larger than 6 \(\sigma\) was required for reliable simulations (Grosfils, 2009).

Effect of cutoff on energy conservation

Cutoff problems are especially pronounced when energy conservation is required. The net effect could be an increase in the temperature of the system over time. The best practice is to carry out trial simulations of the system under study without temperature control to test it for energy leaks or sources before a production run.

- Short cutoff may lead to an increase in the temperature of the system over time.

- The best practice is to carry out trial simulations without temperature control to test it for energy leaks or sources before a production run.

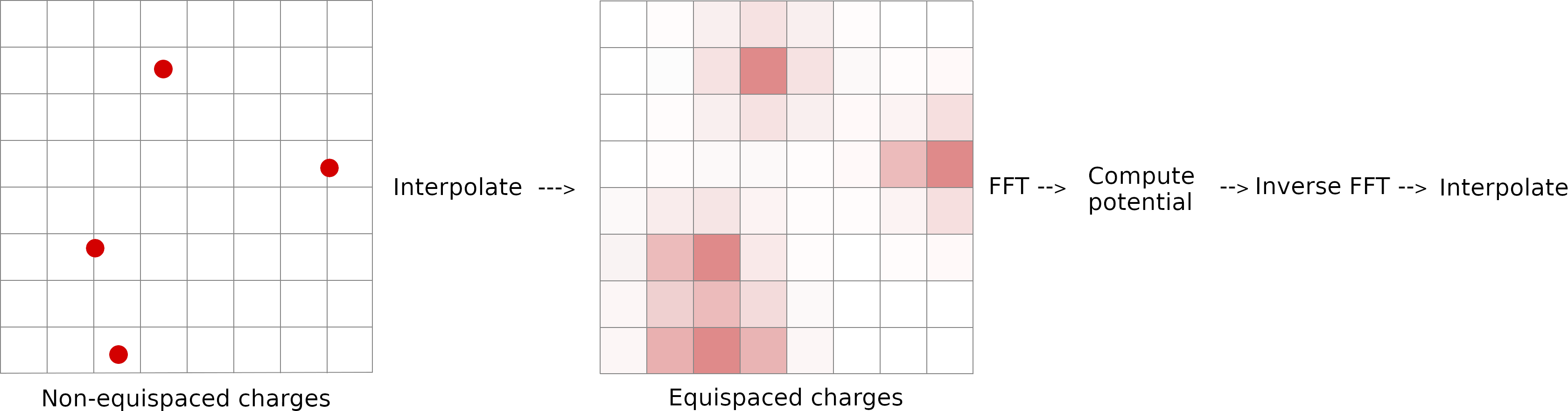

Truncation of the Electrostatic Interactions

Electrostatic interactions occurring over long distances are known to be important for biological molecules. Electrostatic interactions decay slowly and simple increase of the cutoff distance to account for long-range interactions can dramatically raise the computational cost. In periodic simulation systems, the most commonly used method for calculation of long-range electrostatic interactions is particle-mesh Ewald. In this method, the electrostatic interaction is divided into two parts: a short-range contribution, and a long-range contribution. The short-range contribution is calculated by exact summation of all pairwise interactions of atoms separated by a distance that is less than cutoff in real space. The forces beyond the cutoff radius are approximated on the grid in Fourier space commonly by the Particle-Mesh Ewald (PME) method.

- The electrostatic interaction is divided into two parts: a short-range and a long-range.

- The short-range contribution is calculated by exact summation.

- The forces beyond the cutoff radius are approximated using Particle-Mesh Ewald (PME) method.

Selecting Neighbour Searching Methods

GROMACS

Neighbour searching is specified in the run parameter file mdp.

cutoff-scheme = group ; Generate a pair list for groups of atoms. Since version 5.1 group list has been deprecated and only Verlet scheme is available. cutoff-scheme = Verlet ; Generate a pair list with buffering. The buffer size is automatically set based on verlet-buffer-tolerance, unless this is set to -1, in which case rlist will is used. ; Neighbour search method. ns-type = grid ; Make a grid in the box and only check atoms in neighboring grid cells. ns-type = simple ; Loop over every atom in the box. nstlist = 5 ; Frequency to update the neighbour list. If set to 0 the neighbour list is constructed only once and never updated. The default value is 10.NAMD

When run in parallel NAMD uses a combination of spatial decomposition into grid cells (patches) and Verlet lists with extended cutoff distance.

stepspercycle 10 # Number of timesteps in each cycle. Each cycle represents the number of timesteps between atom reassignments. Default value is 20. pairlistsPerCycle 2 # How many times per cycle to regenerate pairlists. Default value is 2.

Selecting LJ Potential Truncation Method

GROMACS

Truncation of LJ potential is specified in the run parameter file mdp.

vdw-modifier = potential-shift ; Shifts the VDW potential by a constant such that it is zero at the rvdw. vdw-modifier = force-switch ; Smoothly switches the forces to zero between rvdw-switch and rvdw. vdw-modifier = potential-switch ; Smoothly switches the potential to zero between rvdw-switch and rvdw. vdw-modifier = none rvdw-switch = 1.0 ; Where to start switching. rvdw = 1.2 ; Cut-off distanceNAMD

Truncation of LJ potential is specified in the run parameter file mdin.

cutoff 12.0 # Cut-off distance common to both electrostatic and van der Waals calculations switching on # Turn switching on/off. The default value is off. switchdist 10.0 # Where to start switching vdwForceSwitching on # Use force switching for VDW. The default value is off.AMBER force fields

AMBER force fields are developed with hard truncation. Do not use switching or shifting with these force fields.

Selecting Cutoff Distance

GROMACS

Cutoff and neighbour searching is specified in the run parameter file mdp.

rlist = 1.0 ; Cutoff distance for the short-range neighbour list. Active when verlet-buffer-tolerance = -1, otherwise ignored. verlet-buffer-tolerance = 0.002 ; The maximum allowed error for pair interactions per particle caused by the Verlet buffer. To achieve the predefined tolerance the cutoff distance rlist is adjusted indirectly. To override this feature set the value to -1. The default value is 0.005 kJ/(mol ps).NAMD

When run in parallel NAMD uses a combination of spatial decomposition into grid cells (patches) and Verlet lists with extended cutoff distance.

pairlistdist 14.0 # Distance between pairs for inclusion in pair lists. Should be bigger or equal than the cutoff. The default value is cutoff. cutoff 12.0 # Local interaction distance. Same for both electrostatic and VDW interactions.

Key Points

The calculation of non-bonded forces is the most computationally demanding part of a molecular dynamics simulation.

Non-bonded interactions are truncated to speed up simulations.

The cutoff distance should be appropriate for the force field and the size of the periodic box.

Advancing Simulation in Time

Overview

Teaching: 20 min

Exercises: 0 minQuestions

How is simulation time advanced in a molecular dynamics simulation?

What factors are limiting a simulation time step?

How to accelerate a simulation?

Objectives

Understand how simulation is advanced in time.

Learn how to choose time parameters and constraints for an efficient and accurate simulation.

Learn how to specify time parameters and constraints in GROMACS and NAMD.

Introduction

To simulate evolution of the system in time we need to solve Newtonian equations of motions. As the exact analytical solution for systems with thousands or millions of atoms is not feasible, the problem is solved numerically. The approach used to find a numerical approximation to the exact solution is called integration.

To simulate evolution of the system in time the integration algorithm advances positions of all atoms by a small step \(\delta{t}\) during which the forces are considered constant. If the time step is small enough the trajectory will be reasonably accurate. A good integration algorithm must be energy conserving. Energy conservation is important for calculation of correct thermodynamic properties. It energy is not conserved simulation may even become unstable in severe cases due to energy going to infinity. Time reversibility is important because it guarantees conservation of energy, angular momentum and any other conserved quantity. Thus good integrator for MD should be time-reversible and energy conserving.

Integration Algorithms

- To simulate evolution of the system in time we need to solve Newtonian equations of motions.

- The exact analytical solution is not feasible, the problem is solved numerically.

- The approach used to find a numerical approximation to the exact solution is called integration.

- The integration algorithm advances positions of all atoms by small time steps \(\delta{t}\).

- If the time step is small enough the trajectory will be reasonably accurate.

- A good integration algorithm for MD should be time-reversible and energy conserving.

The Euler Algorithm

The Euler algorithm is the simplest integration method. It assumes that acceleration does not change during time step. In reality acceleration is a function of coordinates, it changes when atoms move.

- The simplest integration method

The Euler algorithm uses the second order Taylor expansion to estimate position and velocity at the next time step.

Essentially this means: using the current positions and forces calculate the velocities and positions at the next time step.

- Use $\boldsymbol{r}, \boldsymbol{v},\boldsymbol{a}$ at time $t$ to compute $\boldsymbol{r}(t+\delta{t})$ and $\boldsymbol{v}(t+\delta{t})$:

$\qquad\boldsymbol{r}(t+\delta{t})=\boldsymbol{r}(t)+\boldsymbol{v}(t)\delta{t}+\frac{1}{2}\boldsymbol{a}(t)\delta{t}^2$

$\qquad\boldsymbol{v}(t+\delta{t})=\boldsymbol{v}(t)+\frac{1}{2}\boldsymbol{a}(t)\delta{t}$

Euler’s algorithm is neither time-reversible nor energy-conserving, and as such is rather unfavorable. Nevertheless, the Euler scheme can be used to integrate some other than classical MD equations of motion.

For example, GROMACS offers a Euler integrator for Brownian or position Langevin dynamics.

- Assumes that acceleration does not change during time step.

- In reality acceleration is a function of coordinates, it changes when atoms move.

Drawbacks:

- Not energy conserving

- Not reversible in time

Applications

- Not recommended for classical MD

- Can be used to integrate some other equations of motion. For example, GROMACS offers a Euler integrator for Brownian (position Langevin) dynamics.

The original Verlet Algorithm

Verlet improved the Euler integration by using positions at two successive time steps. Using positions from two time steps ensured that acceleration changes were taken into account. Essentially, this algorithm is as follows: calculate the next positions using the current positions, forces, and previous positions:

$\qquad\boldsymbol{r}(t+\delta{t})=2\boldsymbol{r}(t)-\boldsymbol{r}(t-\delta{t})+\boldsymbol{a}(t)\delta{t}^2$

- The Verlet algorithm (Verlet, 1967) requires positions at two time steps. It is inconvenient when starting a simulation when only current positions are available.

While velocities are not needed to compute trajectories, they are useful for calculating observables e.g. the kinetic energy. The velocities can only be computed once the next positions are calculated:

$\qquad\boldsymbol{v}(t+\delta{t})=\frac{r{(t+\delta{t})-r(t-\delta{t})}}{2\delta{t}}$

The Verlet algorithm is time-reversible and energy conserving.

The Velocity Verlet Algorithm

Euler integrator can be improved by introducing evaluation of the acceleration at the next time step. You may recall that acceleration is a function of atomic coordinates and is determined completely by interaction potential.

At each time step, the following algorithm is used to calculate velocity, position, and forces:

- The velocities, positions and forces are calculated at the same time using the following algorithm:

- Use $\boldsymbol{r}, \boldsymbol{v},\boldsymbol{a}$ at time $t$ to compute $\boldsymbol{r}(t+\delta{t})$: $\qquad\boldsymbol{r}(t+\delta{t})=\boldsymbol{r}(t)+\boldsymbol{v}(t)\delta{t}+\frac{1}{2}\boldsymbol{a}(t)\delta{t}^2$

- Derive $ \boldsymbol{a}(t+\delta{t})$ from the interaction potential using new positions $\boldsymbol{r}(t+\delta{t})$

- Use both $\boldsymbol{a}(t)$ and $\boldsymbol{a}(t+\delta{t})$ to compute $\boldsymbol{v}(t+\delta{t})$: $\quad\boldsymbol{v}(t+\delta{t})=\boldsymbol{v}(t)+\frac{1}{2}(\boldsymbol{a}(t)+\boldsymbol{a}(t+\delta{t}))\delta{t} $

- The Verlet algorithm is time-reversible and energy conserving.

Mathematically, Velocity Verlet is equivalent to the original Verlet algorithm. Unlike the basic Verlet algorithm, this algorithm explicitly incorporates velocity, eliminating the issue of the first time step.

The Velocity Verlet algorithm is mathematically equivalent to the original Verlet algorithm. It explicitly incorporates velocity, solving the problem of the first time step in the basic Verlet algorithm.

- The Velocity Verlet algorithm is the most widely used algorithm in MD simulations because of its simplicity and stability

- Due to its simplicity and stability the Velocity Verlet has become the most widely used algorithm in the MD simulations.

Leap Frog Variant of Velocity Verlet

The Leap Frog algorithm is essentially the same as the Velocity Verlet. The Leap Frog and the Velocity Verlet integrators give equivalent trajectories. The only difference is that the velocities are not calculated at the same time as positions. Leapfrog integration is equivalent to updating positions and velocities at interleaved time points, staggered in such a way that they “leapfrog” over each other. The only practical difference between the velocity Verlet and the leap-frog is that restart files are different.

- The leap frog algorithm is a modified version of the Verlet algorithm.

- The only difference is that the velocities are not calculated at the same time as positions.

- Positions and velocities are computed at interleaved time points, staggered in such a way that they “leapfrog” over each other.

Velocity, position, and forces are calculated using the following algorithm:

- Derive $ \boldsymbol{a}(t)$ from the interaction potential using positions $\boldsymbol{r}(t)$

- Use $\boldsymbol{v}(t-\frac{\delta{t}}{2})$ and $\boldsymbol{a}(t)$ to compute $\boldsymbol{v}(t+\frac{\delta{t}}{2})$: $\qquad\boldsymbol{v}(t+\frac{\delta{t}}{2})=\boldsymbol{v}(t-\frac{\delta{t}}{2}) + \boldsymbol{a}(t)\delta{t}$

- Use current $\boldsymbol{r}(t)$ and $\boldsymbol{v}(t+\frac{\delta{t}}{2})$ to compute $\boldsymbol{r}(t+\delta{t})$ : $\qquad\boldsymbol{r}(t+\delta{t})=\boldsymbol{r}(t)+\boldsymbol{v}(t+\frac{\delta{t}}{2})\delta{t}$

- The Leap Frog and the Velocity Verlet integrators give equivalent trajectories.

- Restart files are different

Discontinuities in simulations occur when the integrator is changed

Since velocities in Leap-frog restart files are shifted by half a time step from coordinates, changing the integrator to Verlet will introduce some discontinuity.

Integrator pairs of coordinates ($r$) and velocities ($v$) Velocity-Verlet $[r_{t=0}, v_{t=0}], [r_{t=1}, v_{t=1}], [r_{t=2}, v_{t=2}], \ldots $ Leap-Frog $[r_{t=0}, v_{t=0.5}], [r_{t=1}, v_{t=1.5}], [r_{t=2}, v_{t=2.5}], \ldots $ A simulation system will experience an instantaneous change in kinetic energy. That makes it impossible to transition seamlessly between integrators.

Selecting the Integrator

GROMACS

Several integration algorithms available in GROMACS are specified in the run parameter mdp file.

integrator = md ; A leap frog algorithm integrator = md-vv ; A velocity Verlet algorithm integrator = md-vv-avek ; A velocity Verlet algorithm same as md-vv except the kinetic energy is calculated as the average of the two half step kinetic energies. More accurate than the md-vv. integrator = sd ; An accurate leap frog stochastic dynamics integrator. integrator = bd ; A Euler integrator for Brownian or position Langevin dynamics.NAMD

The only available integration method is Verlet.

How to Choose Simulation Time Step?

Larger time step allows to run simulation faster, but accuracy decreases.

Mathematically Verlet family integrators are stable for time steps:

\(\qquad\delta{t}\leq\frac{1}{\pi{f}}\qquad\) where \(f\) is oscillation frequency.

- Verlet family integrators are stable for time steps: \(\delta{t}\leq\frac{1}{\pi{f}}\) where \(f\) is oscillation frequency.

In molecular dynamics stretching of the bonds with the lightest atom H is usually the fastest motion. The period of oscillation of a C-H bond is about 10 fs. Hence Verlet integration will be stable for time steps < 3.2 fs. In practice, the time step of 1 fs is recommended to describe this motion reliably.

- Vibrations of bonds with hydrogens have period of 10 fs

- Bond vibrations involving heavy atoms and angles involving hydrogen atoms have period of 20 fs

If the dynamics of hydrogen atoms is not essential for a simulation, bonds with hydrogens can be constrained. By replacing bond vibrations with holonomic (not changing in time) constraints the simulation step can be doubled since the next fastest motions (bond vibrations involving only heavy atoms and angles involving hydrogen atoms) have a period of about 20 fs. Further increase of the simulation step requires constraining bonds between all atoms and angles involving hydrogen atoms. Then the next fastest bond vibration will have 45 fs period allowing for another doubling of the simulation step.

To accelerate a simulation the electrostatic interactions outside of a specified cutoff distance can be computed less often than the short range bonded and non-bonded interactions. It is also possible to employ an intermediate timestep for the short-range non-bonded interactions, performing only bonded interactions every timestep.

- Stretching of bonds with the lightest atom H is the fastest motion.

- As period of oscillation of a C-H bond is about 10 fs, Verlet integration is stable for time steps < 3.2 fs.

- In practice, the time step of 1 fs is recommended to describe this motion reliably.

- Simulation step can be doubled by constraining bonds with hydrogens.

- Further increase of the simulation step requires constraining bonds between all atoms and angles involving hydrogen atoms.

Other ways to increase simulation speed

- Compute long range electrostatic interactions less often than the short range interactions.

- Employ an intermediate timestep for the short-range non-bonded interactions, performing only bonded interactions at each timestep.

- Hydrogen mass repartitioning allows increasing time step to 4 fs.

Specifying Time Parameters

GROMACS

Time parameters are specified in the mdp run parameter file.

dt = 0.001 ; Time step, ps nsteps = 10000 ; Number of steps to simulate tinit = 0 ; Time of the first stepNAMD

Time parameters are specified in the mdin run parameter file.

TimeStep = 1 # Time step, fs NumSteps = 10000 # Number of steps to simulate FirstTimeStep = 0 # Time of the first step # Multiple time stepping parameters nonbondedFreq 2 # Number of timesteps between short-range non-bonded evaluation. fullElectFrequency 4 # Number of timesteps between full electrostatic evaluations

Constraint Algorithms

To constrain bond length in a simulation the equations of motion must be modified. Constraint forces acting in opposite directions along a bond are usually applied to accomplish this. The total energy of the simulation system is not affected in this case because the total work done by constraint forces is zero. In constrained simulation, the unconstrained step is performed first, then corrections are applied to satisfy constraints.

- To constrain bond length in a simulation the equations of motion must be modified.

- The goal is to constrain some bonds without affecting dynamics and energetics of a system.

- One way to constrain bonds is to apply constraint force acting along a bond in opposite direction.

In constrained simulation first the unconstrained step is done, then corrections are applied to satisfy constraints.

Since bonds in molecules are coupled, satisfying all constraints is a complex nonlinear problem. Is it fairly easy to solve it for a small molecule like water but as the number of coupled bonds increases, the problem becomes more difficult. Several algorithms have been developed for use specifically with small or large molecules.

- As bonds in molecules are coupled satisfying all constraints in a molecule becomes increasingly complex for larger molecules.

- Several algorithms have been developed for use specifically with small or large molecules.

SETTLE

SETTLE is very fast analytical solution for small molecules. It is widely used to constrain bonds in water molecules.

- Very fast analytical solution for small molecules.

- Widely used to constrain bonds in water molecules.

SHAKE

SHAKE is an iterative algorithm that resets all bonds to the constrained values sequentially until the desired tolerance is achieved. SHAKE is simple and stable, it can be applied for large molecules and it works with both bond and angle constraints. However it is substantially slower than SETTLE and hard to parallelize. SHAKE may fail to find the constrained positions when displacements are large. The original SHAKE algorithm was developed for use with a leap-frog integrator. Later on, the extension of SHAKE for use with a velocity Verlet integrator called RATTLE has been developed. Several other extensions of the original SHAKE algorithm exist (QSHAKE, WIGGLE, MSHAKE, P-SHAKE).

- Iterative algorithm that resets all bonds to the constrained values sequentially until the desired tolerance is achieved.

- Simple and stable, it can be applied for large molecules.

- Works with both bond and angle constraints.

- Slower than SETTLE and hard to parallelize.

- SHAKE may fail to find the constrained positions when displacements are large.

Extensions of the original SHAKE algorithm: RATTLE, QSHAKE, WIGGLE, MSHAKE, P-SHAKE.

LINCS

LINCS algorithm (linear constraint solver), employs a power series expansion to determine how to move the atoms such that all constraints are satisfied. It is 3-4 times faster than SHAKE and easy to parallelize. The parallel LINCS (P-LINCS) allows to constrain all bonds in large molecules. The drawback is that it is not suitable for constraining both bonds and angles.

- Linear constraint solver

- 3-4 times faster than SHAKE and easy to parallelize.

- The parallel LINCS (P-LINCS) allows to constrain all bonds in large molecules.

- Not suitable for constraining both bonds and angles.

Specifying Constraints

GROMACS

SHAKE, LINCS and SETTLE constraint algorithms are implemented. They are selected via keywords in mdp input files

constraints = h-bonds ; Constrain bonds with hydrogen atoms constraints = all-bonds ; Constrain all bonds constraints = h-angles ; Constrain all bonds and additionally the angles that involve hydrogen atoms constraints = all-angles ; Constrain all bonds and angles constraint-algorithm = LINCS ; Use LINCS constraint-algorithm = SHAKE ; Use SHAKE shake-tol = 0.0001 ; Relative tolerance for SHAKE, default value is 0.0001.SETTLE can be selected in the topology file:

[ settles ] ; OW funct doh dhh 1 1 0.1 0.16333NAMD

SHAKE and SETTLE constraint algorithms are implemented. They are selected via keywords in simulation input file.

rigidBonds water # Use SHAKE to constrain bonds with hydrogens in water molecules. rigidBonds all # Use SHAKE to constrain bonds with hydrogens in all molecules. rigidBonds none # Do not constrain bonds. This is the default. rigidTolerance 1.0e-8 # Stop iterations when all constrained bonds differ from the nominal bond length by less than this amount. Default value is 1.0e-8. rigidIterations 100 # The maximum number of iterations. If the bond lengths do not converge, a warning message is emitted. Default value is 100. rigidDieOnError on # Exit and report an error if rigidTolerance is not achieved after rigidIterations. The default value is on. useSettle on # If rigidBonds are enabled then use the SETTLE algorithm to constrain waters. The default value is on.

Key Points

A good integration algorithm for MD should be time-reversible and energy conserving.

The most widely used integration method is the velocity Verlet.

Simulation time step must be short enough to describe the fastest motion.

Time step can be increased if bonds involving hydrogens are constrained.

Additional time step increase can be achieved by constraining all bonds and angles involving hydrogens.

Periodic Boundary Conditions

Overview

Teaching: 20 min

Exercises: 0 minQuestions

How to simulate a bulk (gas, liquid or solid) system by using only a small part?

Objectives

Understand why and when periodic boundary conditions are used.

Understand how shape and size of a periodic box can affect simulation.

Learn how to set periodic box parameters in GROMACS and NAMD.

What is PBC and why is it important?

In most cases we want to simulate a system in realistic environment, such as solution. If one tries to simulate a protein in a droplet of water, it will simply evaporate.

The boundary to contain molecules in simulation is needed to preserve thermodynamic properties like temperature, pressure and density. The use of PBC in simulations allows the inclusion of bulk solvent or crystalline environments. In other words periodic boundary conditions make it possible to approximate an infinite system by using a small part (unit cell). A unit cell in MD is usually referred to as periodic box.

- In most cases we want to simulate a system in realistic environment, such as solution.

- Try simulating a droplet of water, it will simply evaporate.

- We need a boundary to contain water and control temperature, pressure, and density.

- Periodic boundary conditions allow to approximate an infinite system by using a small part (unit cell).

To implement PBC the unit cell is surrounded by translated copies in all directions to approximate an infinitely large system. When one molecule diffuses across the boundary of the simulation box it reappears on the opposite side. So each molecule always interacts with its neighbours even though they may be on opposite sides of the simulation box. This approach replaces the surface artifacts caused by the interaction of the isolated system with a vacuum with the PBC artifacts which are in general much less severe.

- Unit cell is surrounded by an infinite number of translated copies in all directions (images).

- When a particle in unit cell moves across the boundary it reappears on the opposite side.

- Each molecule always interacts with its neighbors even though they may be on opposite sides of the simulation box.

- Artifacts caused by the interaction of the isolated system with a vacuum are replaced with the PBC artifacts which are in general much less severe.

Choosing periodic box size and shape.

Box shape

Cubic periodic box

A cubic box is the most intuitive and common choice, but it is inefficient due to irrelevant water molecules in the corners. The extra water will make your simulation run slower.

- A cubic box is the most intuitive and common choice

- Cubic box is inefficient due to irrelevant water molecules in the corners.

Ideally you need a sufficiently large sphere of water surrounding the macromolecule, but that’s impossible because spheres can’t be packed to fill space.

- Ideal simulation system is a sphere of water surrounding the macromolecule, but spheres can’t be packed to fill space.

Octahedral and dodecahedral periodic boxes

A common alternatives that are closer to spherical are the dodecahedron (any polyhedron with 12 faces) or the truncated octahedron (14 faces). These shapes work reasonably well for globular macromolecules.

- The dodecahedron (12 faces) or the truncated octahedron (14 faces) are closer to sphere.

| Space filling with truncated octahedrons | |

|---|---|

- These shapes work reasonably well for globular macromolecules.

Triclinic periodic boxes

The triclinic box is the least symmetric of all types of periodic boxes. The triclinic system defines the unit cells by three basis vectors of unequal length, and the angles between these vectors must all be different from each other, and not 90 degrees.

In simulation packages, however, there usually are no such restrictions for triclinic boxes, which is why they are the most generic periodic boundary conditions. Any periodic box can be converted into a triclinic box with specific box vectors and angles.

- Any repeating shape that fills all of space has an equivalent triclinic unit cell.

- A periodic box of any shape can be represented by a triclinic box with specific box vectors and angles.

There are two reasons why triclinic boxes are useful: First, they can be used to simulate crystals that don’t have rectangular unit cells. In addition, the best triclinic cell has about 71% the volume of an ideal rectangular cell.

- The optimal triclinic cell has about 71% the volume of the optimal rectangular cell.

Box size

A good rule of thumb is to keep the box at least 10 Å away from the solute. Of course this assumes completely equilibrated system. If you are preparing a new system, make sure there is at least a margin of 13 Å between the solvent and the box, since the solvent will come closer to the solute during equilibration and the box will contract.

- The minimum box size should extend at least 10 nm from the solute.

In order to avoid short range interactions between a molecule and its images, the shortest periodic box vector should be at least twice as big as the cuf-off radius.

- The shortest periodic box vector should be at least twice bigger than the cuf-off radius.

The solvent molecules in simulations involving macromolecules should not “feel” both sides of a solute. In other words, there must be at least twice the cut-off distance between a macromolecule and any of its images.

- In simulations with macromolecules solvent molecules should not “feel” both sides of a solute.

When your simulation system is small make sure you do not set the cutoff too high!

Pitfalls

Solvated macromolecules rotate during simulations. Furthermore macromolecules may undergo conformational changes. Often these changes are of major interest and should not be restricted in any way.

Periodic boxes for elongated molecules are also elongated when the distance between the solute and the box is used to prepare them. If the smallest box dimension is not large enough rotation will result in the interaction between the molecule and its periodic images and lead to unphysically restricted dynamics. Thus, when setting up a periodic system, you must consider rotation of elongated macromolecules as well as possible changes in conformation.

Use of a cubical or dodecahedral box is one way to solve this problem. A disadvantage of such an approach is that you will need a lot of water to fill the box.

- A simulation system with elongated solute in cubic or dodecahedral box will have a large amount of water located far away from the solute.

- Consider using a narrow rectangular box.

- Rotation of elongated macromolecules and/or conformational changes must be taken in consideration.

Using a narrow box together with constraining rotational motion is more efficient [1]. Be aware, however, that the box shape itself may impact conformational dynamics by restricting motion in certain directions [2]. This effect may be significant when the amount of solvent is minimal.

- Constrain the rotational motion.

- The box shape itself may influence conformational dynamics by restricting motions in certain directions.

Specifying Periodic Box

GROMACS

The box specification is integrated into structure files. The box parameters can be set using the editconf program or manually. The editconf program accepts the following options:

-bt Box type triclinic, cubic, dodecahedron, octahedron -box Box vectors lengths, a, b, c nm -angles Box vectors angles, bc, ac, ab degrees -d Distance between the solute and the box nm Example:

module load StdEnv/2020 gcc gromacs wget http://files.rcsb.org/view/1lyz.pdb gmx pdb2gmx -f 1lyz.pdb -ff amber99sb-ildn -water spce -ignh gmx editconf -f conf.gro -o conf_boxed.gro -d 1.0 -bt cubicIn the example above the editconf program will append box vectors to the structure file ‘conf.gro’ and save it in the file ‘conf_boxed.gro’. The 9 components of the three box vectors are saved in the last line of the structure file in the order: xx yy zz xy xz yx yz zx zy. Three of the values (xy, xz, and yz) are always zeros because they are duplicates of (yx, zx, and zy). The values of the box vectors components are related to the unit cell vectors \(a,b,c,\alpha,\beta,\gamma\) from the CRYST1 record of a PDB file with the equations:

\[xx=a, yy=b\cdot\sin(\gamma), zz=\frac{v}{(a*b*\sin(\gamma))}\] \[xy=0, xz=0, yx=b\cdot\cos(\gamma)\] \[yz=0, zx=c\cdot\cos(\beta), zy=\frac{c}{\sin(\gamma)}\cdot(cos(\alpha)-cos(\beta)\cdot\cos(\gamma))\] \[v=\sqrt{1-\cos^2(\alpha)-cos^2(\beta)-\cos^2(\gamma) +2.0\cdot\cos(\alpha)\cdot\cos(\beta)\cdot\cos(\gamma)}\cdot{a}\cdot{b}\cdot{c}\]NAMD

Periodic box is specified in the run parameter file by three unit cell vectors, the units are Å.

# cubic box cellBasisVector1 100 0 0 cellBasisVector2 0 100 0 cellBasisVector3 0 0 100Alternatively periodic box parameters can be read from the .xsc (eXtended System Configuration) file by using the extendedSystem keyword. If this keyword is used cellBasisVectors are ignored. NAMD always generates .xsc files at runtime.

extendedSystem restart.xsc

Comparing periodic boxes

Using the structure file ‘conf.gro’ from the example above generate triclinic, cubic, dodecahedral and truncated octahedral boxes with the 15 Å distance between the solute and the box edge.

Which of the boxes will be the fastest to simulate?

References:

- Molecular dynamics simulations with constrained roto-translational motions: Theoretical basis and statistical mechanical consistency

- The effect of box shape on the dynamic properties of proteins simulated under periodic boundary conditions

- Periodic box types in Gromacs manual

Key Points

Periodic boundary conditions are used to approximate an infinitely large system.

Periodic box should not restrict molecular motions in any way.

The macromolecule shape, rotation and conformational changes should be taken into account in choosing the periodic box parameters.

Degrees of Freedom

Overview

Teaching: 10 min

Exercises: 0 minQuestions

How is kinetic energy contained and distributed in a dynamic molecular system

Why constraints are used in MD simulations, and how they can affect dynamics

Objectives

Molecular degrees of freedom

Molecular degrees of freedom refer to the number of unique ways a molecule may move (increase its kinetic energy). Thus the molecular degrees of freedom describe how kinetic energy is contained and distributed in a molecule. Dynamical molecular systems are characterized by numerous degrees of freedom. The motion of molecules can be decomposed into translational, rotational, and vibrational components.

- The number of unique ways a molecule may move (increase its kinetic energy).

- Describe how kinetic energy is contained and distributed in a molecule.

- Translational, rotational, and vibrational components.

Equipartition theorem

On average, when thermal energy is added to a system, the energy is shared equally among the degrees of freedom (equipartition theorem). In other words, classical equipartition principle states that every (quadratic) degree of freedom contributes equally to the total energy in thermal equilibrium. Each degree of freedom has an average energy of \(\frac{1}{2}k_BT\) and contributes \(\frac{1}{2}k_B\) to the system’s heat capacity.

- The energy is shared equally among the degrees of freedom (equipartition theorem).

- Each degree of freedom has an average energy of \(\frac{1}{2}k_BT\) and contributes \(\frac{1}{2}k_B\) to the system’s heat capacity.

Degrees of freedom and thermodynamics properties